From developing

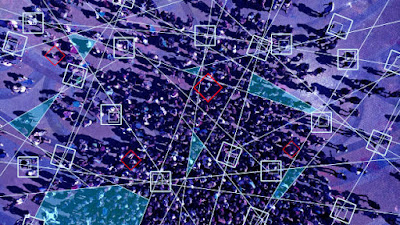

single autonomous agents to building groups of distributed autonomous agents

that coordinate themselves, distributed intelligence is the obvious next step.

A multi-agent system is made up of many agents.

Communication is a prerequisite for cooperation.

The fundamental idea is to enable distributed problem-solving rather than to use a collection of agents as a simple parallelization of the single-agent approach. Agents cooperate, exchange information, and assign tasks to one another. Sensor data, for example, is exchanged to learn about the current state of the environment, and a task is given to whichever agent is in the best position to complete it at the time.

Agents may be software agents or embodied agents in the form of robots, in which case the result is a multi-robot system. RoboCup Soccer (Kitano et al. 1997), in which two teams of robots compete at soccer, is an example of this. Typical challenges include detecting the ball cooperatively and sharing that knowledge, as well as assigning tasks, such as deciding who will go after the ball next.
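The two RoboCup challenges just mentioned, cooperative ball detection and deciding who goes after the ball, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any particular RoboCup team works: every robot that sees the ball is assumed to report a 2D position with a confidence weight, the team fuses the reports by a weighted average, and the chase is given to the robot closest to the fused estimate. The function names `fuse_ball_observations` and `assign_chaser` and all coordinates are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]

def fuse_ball_observations(observations: Dict[str, Tuple[Point, float]]) -> Point:
    """Confidence-weighted average of the ball positions reported by the robots that see it."""
    total = sum(weight for _, weight in observations.values())
    if total <= 0:
        raise ValueError("no robot currently sees the ball")
    x = sum(pos[0] * weight for pos, weight in observations.values()) / total
    y = sum(pos[1] * weight for pos, weight in observations.values()) / total
    return (x, y)

def assign_chaser(robots: Dict[str, Point], ball: Point) -> str:
    """Give the 'go for the ball' task to the robot currently closest to the shared estimate."""
    return min(robots, key=lambda name: math.dist(robots[name], ball))

# Hypothetical team state: r3 does not see the ball but still benefits from the shared estimate.
robots = {"r1": (0.0, 0.0), "r2": (4.0, 1.0), "r3": (9.0, 5.0)}
sightings = {"r1": ((5.0, 2.0), 1.0), "r2": ((5.4, 2.2), 3.0)}
ball = fuse_ball_observations(sightings)
chaser = assign_chaser(robots, ball)
print(ball, chaser)
```

Here the fused estimate is roughly (5.3, 2.15), and `r2` is assigned the ball because it is nearest; re-running the assignment every cycle lets the task move between robots as the game evolves.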

Restricting each agent to information from its local area reduces the complexity of both the individual agent and the overall approach. Despite this local perspective, agents can communicate, disseminate, and relay information across the group, resulting in a distributed, collective view of the global situation.
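One standard way for agents with only local information to build a shared picture of a global quantity is distributed average consensus; the text does not name a specific protocol, so this is an illustrative choice. In the sketch below, six hypothetical agents on a ring each hold one local sensor reading and only ever exchange values with their two ring neighbors, yet every agent's estimate converges to the global mean. The step size `eps` is an assumed parameter.

```python
from typing import List

def consensus_step(values: List[float], neighbors: List[List[int]], eps: float) -> List[float]:
    """One synchronous round: each agent nudges its value toward those of its neighbors."""
    return [
        v + eps * sum(values[j] - v for j in neighbors[i])
        for i, v in enumerate(values)
    ]

# Hypothetical setup: six agents on a ring, each with one local temperature reading.
readings = [20.0, 22.0, 19.0, 25.0, 21.0, 23.0]
n = len(readings)
ring = [[(i - 1) % n, (i + 1) % n] for i in range(n)]  # each agent talks to two neighbors only

estimates = readings[:]
for _ in range(200):
    # keeping eps below 1 / (largest neighbor count) = 0.5 guarantees convergence on this connected ring
    estimates = consensus_step(estimates, ring, eps=0.3)

global_mean = sum(readings) / n
print([round(e, 3) for e in estimates], round(global_mean, 3))
```

Because each update is symmetric between neighbors, the sum of all values is preserved, so the only state the agents can agree on is the true global mean, even though no agent ever saw all the readings.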

Three distinct concepts can be used to construct distributed intelligence: scalable decentralized systems, non-scalable decentralized systems, and decentralized systems with central components.

In scalable decentralized systems, all agents act in equal roles, without a master-slave hierarchy or a central control element. Because the system allows only local agent-to-agent communication, there is no need for every agent to coordinate with every other agent, which permits potentially very large system sizes.

In non-scalable decentralized systems, all-to-all communication is an important part of the coordination mechanism, but it may become a bottleneck in systems with too many agents. A typical RoboCup Soccer system, for example, requires all robots to cooperate with all other robots at all times.
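A back-of-the-envelope count shows why all-to-all coordination becomes a bottleneck while local communication scales. The sketch assumes one message per ordered agent pair per coordination round and, for the local case, a hypothetical fixed neighborhood of `k = 4` agents; real protocols differ, but the growth rates are the point.

```python
def messages_all_to_all(n: int) -> int:
    """Every agent sends one message to every other agent per round."""
    return n * (n - 1)

def messages_local(n: int, k: int) -> int:
    """Every agent sends one message to each of at most k neighbors per round."""
    return n * min(k, n - 1)

for n in (10, 100, 1000):
    # per-agent load: n - 1 messages for all-to-all versus at most k for local communication
    print(f"{n:>5} agents: all-to-all={messages_all_to_all(n):>7}  local(k=4)={messages_local(n, 4):>5}")
```

Under these assumptions, total all-to-all traffic grows quadratically (999,000 messages per round at 1000 agents), whereas local traffic grows only linearly (4,000) and each agent's own load stays fixed at `k`.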

Finally, in decentralized systems with central components, the agents may interact with one another through a central server (e.g., the cloud) or be coordinated by a central control. The decentralized and central approaches can also be mixed: basic tasks are delegated to the agents, which complete them independently and locally, while more difficult tasks are managed centrally.

Vehicular ad hoc networks are an example of such a use case (Liang et al. 2015). Each agent is self-contained, yet collaboration aids traffic coordination. For example, intelligent cars may build dynamic multi-hop networks to notify others about an accident that is still hidden from view. For a safer and more efficient traffic flow, cars may also coordinate passing maneuvers. All of this may be accomplished through global communication with a central server or, depending on the stability of the connection, through local car-to-car communication.
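The multi-hop accident warning can be sketched as a breadth-first flood over the cars' connectivity graph. The sketch assumes a straight road (cars reduced to positions in meters), a hypothetical radio range of 300 m, and that every car within range relays the warning once; `multihop_broadcast` is an illustrative name, not part of any VANET standard.

```python
from collections import deque
from typing import Dict, List

def multihop_broadcast(positions: List[float], source: int, radio_range: float) -> Dict[int, int]:
    """Flood a warning from `source`; return the hop count at which each reachable car hears it."""
    hops = {source: 0}
    queue = deque([source])
    while queue:
        cur = queue.popleft()
        for other, pos in enumerate(positions):
            # a car that has not heard the warning yet and is within radio range receives and relays it
            if other not in hops and abs(pos - positions[cur]) <= radio_range:
                hops[other] = hops[cur] + 1
                queue.append(other)
    return hops

# Hypothetical positions along a road in meters; the car at 0 m witnesses the accident.
cars = [0.0, 250.0, 480.0, 700.0, 1500.0]
warned = multihop_broadcast(cars, source=0, radio_range=300.0)
print(warned)
```

In this example the car at 700 m is warned after three hops although it is far outside the witness's own radio range, while the car at 1500 m is disconnected from the ad hoc network; that is exactly the situation in which falling back on a central server helps.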

Swarm intelligence research combines natural swarm systems and artificial, engineered distributed systems. A core notion in swarm intelligence is extracting fundamental principles from decentralized biological systems and translating them into design principles for decentralized engineered systems (scalable decentralized systems as defined above).

Swarm intelligence was inspired by the collective behavior of flocks, swarms, and herds. Social insects such as ants, honeybees, wasps, and termites are a good example. These swarm systems are built on self-organization and work in a fundamentally decentralized manner.

Self-organization results from a complex interplay of positive feedback (deviations are amplified) and negative feedback (deviations are damped); crystallization, pattern formation in embryology, and synchronization in swarms are examples.

Swarm intelligence investigates systems with the following key features:

• The system is made up of a large number of autonomous agents that are homogeneous in terms of their capabilities and behaviors.

• Each agent follows a set of relatively simple rules compared to the complexity of the task.

• The resulting system behavior relies heavily on the interaction and collaboration of the agents.

Reynolds (1987) published a seminal paper modeling the flocking behavior of birds with three basic local rules: alignment (align your direction of movement with your neighbors), cohesion (stay close to your neighbors), and separation (keep a minimum distance to any other agent). As a result, a realistic, self-organized flocking behavior emerges.
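Reynolds' three rules translate almost directly into code. The following is a minimal 2D sketch in the spirit of his model, not a reproduction of the original implementation: the neighborhood radius, minimum distance, rule weights, and speed cap are arbitrary assumed values, and all boids are updated synchronously from the same snapshot.

```python
import math
import random
from dataclasses import dataclass
from typing import List

@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float

def step(boids: List[Boid], radius: float = 5.0, min_dist: float = 1.0,
         w_align: float = 0.05, w_cohere: float = 0.01, w_separate: float = 0.1,
         max_speed: float = 1.0) -> None:
    """Apply the three local rules once to every boid, using only neighbors within `radius`."""
    new_velocities = []
    for b in boids:
        nbrs = [o for o in boids if o is not b and math.hypot(o.x - b.x, o.y - b.y) < radius]
        ax = ay = 0.0
        if nbrs:
            k = len(nbrs)
            # Alignment: steer toward the neighbors' average velocity.
            ax += w_align * (sum(o.vx for o in nbrs) / k - b.vx)
            ay += w_align * (sum(o.vy for o in nbrs) / k - b.vy)
            # Cohesion: steer toward the neighbors' center of mass.
            ax += w_cohere * (sum(o.x for o in nbrs) / k - b.x)
            ay += w_cohere * (sum(o.y for o in nbrs) / k - b.y)
            # Separation: push away from any neighbor closer than min_dist.
            for o in nbrs:
                if math.hypot(o.x - b.x, o.y - b.y) < min_dist:
                    ax += w_separate * (b.x - o.x)
                    ay += w_separate * (b.y - o.y)
        vx, vy = b.vx + ax, b.vy + ay
        speed = math.hypot(vx, vy)
        if speed > max_speed:  # cap the speed so the update stays numerically tame
            vx, vy = vx / speed * max_speed, vy / speed * max_speed
        new_velocities.append((vx, vy))
    for b, (vx, vy) in zip(boids, new_velocities):
        b.vx, b.vy = vx, vy
        b.x += vx
        b.y += vy

# Usage: 30 boids with random positions and headings.
random.seed(1)
flock = [Boid(random.uniform(0, 10), random.uniform(0, 10),
              random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(30)]
for _ in range(100):
    step(flock)
```

No boid ever looks beyond its own neighborhood; any coherent group motion that appears is an emergent result of the three rules.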

By relying only on local interactions between agents, a high level of robustness may be achieved. At any moment, each agent has only a limited understanding of the system's global state (the swarm-level state) and relies on communication with nearby agents to complete its task. Because the swarm's knowledge is distributed, a single point of failure is rare. A perfectly homogeneous swarm has a high degree of redundancy; that is, all agents have the same capabilities and can therefore be replaced by any other agent.

Relying only on local interactions between agents also yields a high level of scalability. Because data storage is distributed, there is less need to synchronize data or keep it coherent. Because the communication and coordination overhead of each agent is determined by the size of its neighborhood, the same algorithms may be used for systems of nearly any size.

Ant Colony Optimization (ACO) and Particle Swarm Optimization (PSO) are two well-known examples of swarm intelligence in engineered systems from the field of optimization. Both are metaheuristics, which means they can be applied to a wide range of optimization problems.

ACO was inspired by ants and their use of pheromones to find the shortest paths. The optimization problem must be represented as a graph. A swarm of virtual ants travels from node to node, choosing which edge to take next with a probability that depends on how many other ants have used it before (through pheromone, implementing positive feedback) and on a heuristic parameter, such as path length (greedy search). Evaporation of pheromones balances the exploration-exploitation trade-off (negative feedback).
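The edge-selection rule and pheromone dynamics can be illustrated on a small traveling salesman instance. This sketch follows the classic Ant System formulation, which is only one of several ACO variants: an ant at city `i` picks the next city `j` with probability proportional to `tau[i][j]**alpha * (1/dist[i][j])**beta`, all pheromone then evaporates at rate `rho`, and each ant deposits `q / L` on the edges of its tour of length `L`. The parameter values are assumed defaults for illustration, and the cities are assumed to be distinct points.

```python
import math
import random
from typing import List, Sequence, Tuple

def tour_length(tour: Sequence[int], dist: List[List[float]]) -> float:
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))

def ant_system_tsp(coords: List[Tuple[float, float]], n_ants: int = 10, n_iters: int = 100,
                   alpha: float = 1.0, beta: float = 3.0, rho: float = 0.5, q: float = 1.0,
                   seed: int = 0) -> Tuple[List[int], float]:
    rng = random.Random(seed)
    n = len(coords)
    dist = [[math.dist(a, b) for b in coords] for a in coords]
    tau = [[1.0] * n for _ in range(n)]  # initial pheromone on every edge
    best_tour, best_len = list(range(n)), math.inf
    for _ in range(n_iters):
        tours = []
        for _ in range(n_ants):
            tour = [rng.randrange(n)]
            unvisited = set(range(n)) - {tour[0]}
            while unvisited:
                i = tour[-1]
                cand = list(unvisited)
                # pheromone term (positive feedback) times greedy distance heuristic
                weights = [tau[i][j] ** alpha * (1.0 / dist[i][j]) ** beta for j in cand]
                j = rng.choices(cand, weights=weights)[0]
                tour.append(j)
                unvisited.remove(j)
            tours.append(tour)
        # evaporation on every edge (negative feedback)
        for i in range(n):
            for j in range(n):
                tau[i][j] *= 1.0 - rho
        # deposit: shorter tours leave more pheromone on their edges
        for tour in tours:
            length = tour_length(tour, dist)
            if length < best_len:
                best_tour, best_len = tour[:], length
            for k in range(n):
                a, b = tour[k], tour[(k + 1) % n]
                tau[a][b] += q / length
                tau[b][a] += q / length
    return best_tour, best_len

# Usage: eight cities spaced evenly on a unit circle.
cities = [(math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8)) for k in range(8)]
best_tour, best_len = ant_system_tsp(cities)
print(best_tour, round(best_len, 4))
```

On this instance the best tour found should be the octagon around the circle, of length 16·sin(π/8) ≈ 6.12. Raising `beta` makes the ants greedier, while raising `rho` makes them forget old trails faster and explore more.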

The traveling salesman problem, vehicle routing, and network routing are all examples of ACO applications.

PSO is inspired by flocking. Agents move through the search space with velocity vectors that combine the influence of the global and local best-known solutions (positive feedback), the agent's previous direction of motion, and a random component.
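A minimal global-best PSO sketch follows. Each particle's velocity combines its previous velocity (inertia), a pull toward its own best-known position, and a pull toward the swarm's best-known position; the random component enters through the factors `r1` and `r2`, which is how the canonical formulation realizes it. The coefficients `w`, `c1`, `c2` and the sphere test function are assumed, commonly used illustrative choices; variants with neighborhood-best instead of global-best topologies also exist.

```python
import random
from typing import Callable, List, Tuple

def pso(f: Callable[[List[float]], float], dim: int, bounds: Tuple[float, float],
        n_particles: int = 20, n_iters: int = 200,
        w: float = 0.7, c1: float = 1.5, c2: float = 1.5, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]          # each particle's own best-known position
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # swarm-wide best-known position
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()  # random component
                vel[i][d] = (w * vel[i][d]                          # previous direction (inertia)
                             + c1 * r1 * (pbest[i][d] - pos[i][d])  # pull toward personal best
                             + c2 * r2 * (gbest[d] - pos[i][d]))    # pull toward global best
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

def sphere(x: List[float]) -> float:
    return sum(v * v for v in x)

best_x, best_val = pso(sphere, dim=2, bounds=(-5.0, 5.0))
print(best_x, best_val)
```

The sphere function has its minimum of 0 at the origin, so `best_val` should end up very close to 0; keeping `w` below 1 makes the particles gradually settle instead of oscillating indefinitely.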

Although ACO and PSO conceptually operate in a fully distributed manner, they do not need to be deployed on parallel hardware. They can, however, be parallelized easily.

ACO and PSO are software-based methods, whereas swarm robotics is the application of swarm intelligence to embodied systems. Swarm robotics applies the concept of self-organizing systems based on local information to multi-robot systems with a high degree of resilience and scalability. Following the example of social insects, the goal is to keep each individual robot relatively simple compared to the task complexity while still allowing the robots to collaborate on complex problems.

Because a swarm robot operates only on local information, it can communicate only with nearby swarm robots. The applied control algorithms are designed to allow maximum scalability given a fixed swarm density (i.e., a constant number of robots per area). The same control algorithms should perform well regardless of system size, whether the swarm is grown or shrunk by adding or removing robots.
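Why a fixed density matters can be seen with a quick simulation under simple assumptions: robots are scattered uniformly at random in a square arena that grows with the swarm, and two robots can communicate when they are within a hypothetical range of 2 distance units. Because the arena is resized to keep one robot per unit area, the average number of neighbors, and with it each robot's communication load, stays roughly constant instead of growing with the swarm.

```python
import math
import random

def mean_neighbor_count(n: int, density: float, comm_range: float, seed: int = 0) -> float:
    """Scatter n robots uniformly in a square sized for `density`; average the neighbors in range."""
    rng = random.Random(seed)
    side = math.sqrt(n / density)  # arena grows with the swarm, so robots per area stays constant
    pts = [(rng.uniform(0, side), rng.uniform(0, side)) for _ in range(n)]
    total = 0
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(pts[i], pts[j]) <= comm_range:
                total += 2  # the pair counts as a neighbor for both robots
    return total / n

results = {n: mean_neighbor_count(n, density=1.0, comm_range=2.0) for n in (100, 400, 900)}
for n, k in results.items():
    print(n, round(k, 2))
```

The averages approach π·r²·density ≈ 12.6 as the swarm grows, because robots near the arena boundary have fewer neighbors and make up a shrinking fraction of larger swarms.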

A super-linear performance improvement is often observed, meaning that doubling the size of the swarm improves the swarm's performance by more than a factor of two. As a result, each robot is more productive than before.
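The step from super-linear swarm performance to higher per-robot productivity is a one-line argument. Writing $P(N)$ for the performance of a swarm of $N$ robots (notation introduced here only for illustration):

$$
P(2N) > 2\,P(N) \quad\Longrightarrow\quad \frac{P(2N)}{2N} > \frac{P(N)}{N},
$$

that is, on average each of the $2N$ robots in the larger swarm contributes more than each of the $N$ robots in the smaller one did.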

Swarm robotics systems have been shown to be effective for a wide range of tasks, including aggregation and dispersion behaviors, as well as more complex tasks such as object sorting, foraging, collective transport, and collective decision-making.

Rubenstein et al. (2014) conducted the largest scientific experiment with swarm robots to date, using 1024 miniature mobile robots to emulate self-assembly behavior by arranging the robots into predefined shapes.

Most such experiments have been conducted in the lab, but recent research has taken swarm robots into the field. Duarte et al. (2016), for example, built a swarm of autonomous surface watercraft that navigate the ocean together.

Major issues in swarm intelligence include modeling the relationship between individual behavior and swarm behavior, developing advanced design principles, and deriving guarantees of system properties.

The micro-macro problem is the challenge of determining the resulting swarm behavior from a given individual behavior, and vice versa. It has proven to be a difficult problem that appears both in mathematical modeling and, as an engineering difficulty, in the robot controller design process.

Developing sophisticated methods for designing swarm behavior is not only central to swarm intelligence research but has also proved very difficult. Similarly, because of the combinatorial explosion of action-to-agent assignments, multi-agent learning and evolutionary swarm robotics (i.e., the application of evolutionary computation techniques to swarm robotics) do not scale well with task complexity.

Despite the benefits of robustness and scalability, obtaining strong guarantees for swarm intelligence systems is challenging. In general, the availability and reliability of swarm systems can only be assessed experimentally.

~ Jai Krishna Ponnappan


See also:

AI and Embodiment.

Further Reading:

Bonabeau, Eric, Marco Dorigo, and Guy Theraulaz. 1999. Swarm Intelligence: From Natural to Artificial Systems. New York: Oxford University Press.

Duarte, Miguel, Vasco Costa, Jorge Gomes, Tiago Rodrigues, Fernando Silva, Sancho Moura Oliveira, and Anders Lyhne Christensen. 2016. “Evolution of Collective Behaviors for a Real Swarm of Aquatic Surface Robots.” PLoS ONE 11, no. 3: e0151834.

Hamann, Heiko. 2018. Swarm Robotics: A Formal Approach. New York: Springer.

Kitano, Hiroaki, Minoru Asada, Yasuo Kuniyoshi, Itsuki Noda, Eiichi Osawa, and Hitoshi Matsubara. 1997. “RoboCup: A Challenge Problem for AI.” AI Magazine 18, no. 1: 73–85.

Liang, Wenshuang, Zhuorong Li, Hongyang Zhang, Shenling Wang, and Rongfang Bie. 2015. “Vehicular Ad Hoc Networks: Architectures, Research Issues, Methodologies, Challenges, and Trends.” International Journal of Distributed Sensor Networks 11, no. 8: 1–11.

Reynolds, Craig W. 1987. “Flocks, Herds, and Schools: A Distributed Behavioral Model.” Computer Graphics 21, no. 4 (July): 25–34.

Rubenstein, Michael, Alejandro Cornejo, and Radhika Nagpal. 2014. “Programmable Self-Assembly in a Thousand-Robot Swarm.” Science 345, no. 6198: 795–99.