Artificial intelligence and machine learning are already shaping our lives in many small but significant ways.

For example, AI and machine learning programs recommend content we might enjoy on streaming services like Netflix and Spotify.

These technologies are expected to have an even greater influence on society in the near future, through applications such as fully self-driving vehicles, advanced scientific research, and medical breakthroughs.

However, the computers used for AI and machine learning consume a lot of power.

The demand for computing power associated with these technologies is now doubling every three to four months.
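As a rough illustration of what that doubling rate implies, the short Python sketch below converts a doubling period into a yearly growth factor. The function name is hypothetical, and the calculation assumes steady exponential growth, which the article itself does not claim.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Growth over 12 months if demand doubles every `doubling_months` months.

    Assumes smooth exponential growth (an illustrative assumption).
    """
    return 2.0 ** (12.0 / doubling_months)


# The article gives a range of three to four months per doubling.
for months in (3.0, 4.0):
    factor = annual_growth_factor(months)
    print(f"doubling every {months:g} months -> about {factor:.0f}x per year")
```

Under that assumption, a three-month doubling period corresponds to a 16-fold increase per year and a four-month period to an 8-fold increase.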

Furthermore, cloud computing data centers employed by AI and machine learning applications use more electricity each year than certain small nations.

It's clear that this level of energy usage cannot be sustained.

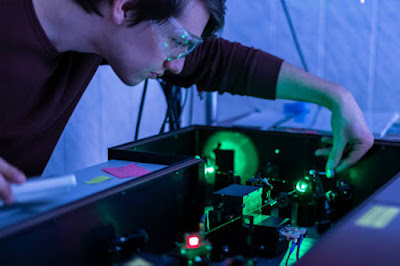

A research team led by the University of Washington has created new optical computing hardware for AI and machine learning that is far faster and uses much less energy than conventional electronics.

The study also addresses another challenge: the 'noise' inherent in optical computing, which can interfere with computing precision.

In a new paper published in January in Science Advances, the team showcases an optical computing system for AI and machine learning that not only mitigates noise but also uses some of it as input to help enhance the creative output of the artificial neural network inside the system.

Changming Wu, a UW doctoral student in electrical and computer engineering, stated, "We've constructed an optical computer that is faster than a conventional digital computer."

"Moreover, this optical computer can develop new objects based on random inputs provided by optical noise, which most researchers have attempted to avoid."

Optical computing noise is primarily caused by stray light particles, or photons, produced by the functioning of lasers inside the device as well as background heat radiation.

To combat noise, the researchers linked their optical computing core to a Generative Adversarial Network (GAN), a type of machine learning network.

The researchers experimented with a variety of noise reduction strategies, including utilizing part of the noise created by the optical computing core as random inputs for the GAN.

For example, the researchers tasked the GAN with learning to handwrite the number "7" as a human would.

The optical computer could not simply print the number in a predetermined typeface.

It had to learn the task in the same way that a child would, by studying visual examples of handwriting and practicing until it could write the number correctly.

Because the optical computer lacked a human hand for writing, its "handwriting" consisted of creating digital pictures with a style close to but not identical to the examples it had examined.

"Instead of teaching the network to read handwritten numbers, we taught it to write numbers using visual examples of handwriting," said senior author Mo Li, an electrical and computer engineering professor at the University of Washington.

"With the support of our computer science collaborators at Duke University, we also demonstrated that the GAN can mitigate the detrimental effect of optical computing hardware noise by using a training technique that is resilient to errors and noise. Furthermore, the network treats the noise as random input, which is required for the network to create output instances."
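The idea that a generative network needs random input to produce varied outputs can be sketched in a few lines of Python. This is a toy illustration only, not the researchers' system: the one-layer `generator`, the Gaussian stand-in for optical noise in `sample_hardware_noise`, and all sizes are assumptions made for the example, and no training is performed.

```python
import math
import random


def sample_hardware_noise(n, rng, sigma=1.0):
    """Stand-in for optical noise. Gaussian noise is an assumption;
    the article does not describe the noise distribution."""
    return [rng.gauss(0.0, sigma) for _ in range(n)]


def generator(z, weights, bias):
    """Toy one-layer generator: each pixel is sigmoid(w . z + b)."""
    pixels = []
    for row, b in zip(weights, bias):
        activation = sum(w * zj for w, zj in zip(row, z)) + b
        pixels.append(1.0 / (1.0 + math.exp(-activation)))
    return pixels


rng = random.Random(0)
latent_dim, n_pixels = 4, 9  # hypothetical sizes (a 3x3 "image")

# Untrained, randomly initialised weights; a real GAN would learn these.
weights = [[rng.uniform(-1.0, 1.0) for _ in range(latent_dim)]
           for _ in range(n_pixels)]
bias = [0.0] * n_pixels

# Same generator, two different noise samples -> two different outputs.
image_a = generator(sample_hardware_noise(latent_dim, rng), weights, bias)
image_b = generator(sample_hardware_noise(latent_dim, rng), weights, bias)
print(image_a != image_b)
```

With the weights held fixed, the only source of variation between the two outputs is the noise vector, which is why a generator without random input would produce the same image every time.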

After learning from handwritten examples of the number seven in a standard AI-training image set, the GAN practiced writing "7" until it could do so successfully.

It developed its own writing style along the way and could write numbers from one to ten in computer simulations.

The next step will be to scale up the device using existing semiconductor manufacturing methods.

To achieve wafer-scale technology, the team plans to use an industrial semiconductor foundry rather than build the next iteration of the device in a lab.

A larger-scale device will boost performance even further, allowing the research team to take on more complex tasks beyond handwriting generation, such as creating artwork and even videos.

"This optical system represents a computer hardware architecture that can enhance the creativity of artificial neural networks used in AI and machine learning," Li explained.

"More importantly, it demonstrates the viability of this system at a large scale where noise and errors can be mitigated and even harnessed. AI applications are using so much energy that it will be unsustainable in the future. This technique has the potential to minimize energy usage, making AI and machine learning more environmentally friendly as well as incredibly quick, resulting in greater overall performance."

Although many people are unaware of it, artificial intelligence (AI) and machine learning are now a part of our regular life online.

For example, intelligent ranking algorithms help search engines like Google, video streaming services like Netflix use machine learning to personalize movie recommendations, and cloud computing data centers use AI and machine learning to support a wide range of services.

The requirements for AI are many, diverse, and difficult.

As these needs rise, so does the need to improve AI performance while also lowering its energy usage.

The energy costs involved with AI and machine learning on a broad scale may be startling.

Cloud computing data centers, for example, use an estimated 200 terawatt hours per year (enough energy to power a small nation), and this consumption is expected to grow enormously in the coming years, posing major environmental risks.
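To put the 200 terawatt-hour figure in more familiar terms, the sketch below converts a yearly energy total into the equivalent continuous power draw. The function name is hypothetical, and the calculation assumes consumption is spread evenly over a 365-day year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def average_power_gw(twh_per_year: float) -> float:
    """Average continuous power in gigawatts for a yearly energy use in TWh."""
    watt_hours = twh_per_year * 1e12  # 1 TWh = 1e12 Wh
    watts = watt_hours / HOURS_PER_YEAR
    return watts / 1e9  # 1 GW = 1e9 W


print(f"{average_power_gw(200):.1f} GW")
```

Under these assumptions, 200 terawatt hours per year works out to an average draw of roughly 23 gigawatts around the clock.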

Now, a team led by associate professor Mo Li of the University of Washington Department of Electrical and Computer Engineering (UW ECE), in partnership with researchers from the University of Maryland, has developed a system that might help speed up AI while lowering its energy and environmental costs.

The researchers described an optical computing core prototype that uses phase-change material (a substance similar to what CD-ROMs and DVDs use to record information) in a paper published in Nature Communications on January 4, 2021.

Their method is quick, energy-efficient, and capable of speeding up AI and machine learning neural networks.

The technique is also scalable and immediately relevant to cloud computing, which employs AI and machine learning to power common software applications like search engines, streaming video, and a plethora of apps for phones, desktop computers, and other devices.

"The technology we designed is geared to execute artificial neural network algorithms, which are a backbone method for AI and machine learning," Li said.

"This breakthrough in research will make AI centers and cloud computing significantly more energy efficient and speedier."

The team is one of the first in the world to employ phase-change material in optical computing to allow artificial neural networks to recognize images.

Recognizing an object in a photo is simple for humans, but it requires a lot of computing power for AI.

Because it is so computationally demanding, image recognition is a benchmark test of a neural network's computing speed and accuracy.

The team's optical computing core, running an artificial neural network, passed this test easily.

"Optical computing first emerged as a concept in the 1980s, but it then faded in the shadow of microelectronics," said Changming Wu, a graduate student in Li's group.

"It has now been revived due to the end of Moore's law [the observation that the number of transistors in a dense integrated circuit doubles about every two years], advances in integrated photonics, and the demands of AI computing. That's a lot of fun."

Optical computing is fast because it transmits data at incredible speeds using light generated by lasers rather than the much slower electrical signals used in conventional digital electronics.

The prototype built by the research team was designed to accelerate an artificial neural network's computational speed, which is measured in billions and trillions of operations per second.

Future incarnations of their technology, according to Li, have the potential to move much quicker.

"This is a prototype, and we're not utilizing the greatest speed possible with optics just yet," Li said.

"Future generations have the potential to accelerate by at least an order of magnitude."

In the eventual real-world use of this technology, any software powered by optical computing over the cloud, such as search engines, video streaming, and cloud-enabled devices, will run faster and perform better.

Li's research team took their prototype a step further by detecting light transmitted through phase-change material to store data and perform computing operations.

Unlike transistors in digital electronics, which need a constant voltage to represent and maintain the zeros and ones used in binary computing, phase-change material does not require any energy to hold its state.

When phase-change material is heated precisely by lasers, it shifts between a crystalline and an amorphous state, much like a CD or DVD.

The material then retains that condition, or "phase," as well as the information that phase conveys (a zero or one), until the laser heats it again.
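The difference between volatile and non-volatile storage described above can be sketched with two toy Python classes. Both classes, the mapping of the crystalline phase to a binary one, and the pulse counter are illustrative assumptions, not details of the team's device.

```python
class PhaseChangeCell:
    """Toy non-volatile bit: the phase persists with no power applied."""

    def __init__(self):
        self.phase = "amorphous"  # assumed to encode 0
        self.laser_pulses = 0     # energy is spent only when the phase changes

    def write(self, bit):
        target = "crystalline" if bit else "amorphous"
        if target != self.phase:
            self.phase = target
            self.laser_pulses += 1

    def read(self):
        return 1 if self.phase == "crystalline" else 0


class VolatileCell:
    """Toy volatile bit: the value is lost as soon as power is removed."""

    def __init__(self):
        self.bit = None
        self.powered = True

    def write(self, bit):
        if self.powered:
            self.bit = bit

    def power_off(self):
        self.powered = False
        self.bit = None

    def read(self):
        return self.bit


pcm = PhaseChangeCell()
pcm.write(1)  # one laser pulse switches the phase
pcm.write(1)  # no change needed, so no further pulse

ram = VolatileCell()
ram.write(1)
ram.power_off()

print(pcm.read(), pcm.laser_pulses, ram.read())
```

In this sketch the phase-change cell has no `power_off` method at all, because nothing about its stored state depends on a continuous power supply.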

"There are other competing schemes to construct optical neural networks," Li explained, "but we believe that using phase-change material has a unique advantage in terms of energy efficiency because the data is encoded in a non-volatile way, meaning that the device does not consume a constant amount of power to store the data."

"Once the data is written there, it stays there indefinitely. You don't need to supply power to keep it in place."

This energy savings is important because it is multiplied by millions of computer servers in hundreds of data centers throughout the globe, resulting in a huge decrease in energy consumption and environmental effect.

By patterning the phase-change material used in their optical computing core into nanostructures, the team was able to improve it even further.

These tiny structures increase the material's durability and stability, as well as its contrast (the ability to discriminate between zero and one in binary code) and computing capacity and accuracy.

Li's research team also fully integrated the phase-change material into the prototype's optical computing core.

"We're doing all we can to incorporate optics here," Wu said.

"We layer the phase-change material on top of a waveguide, which is a thin wire that we etch into the silicon chip to channel light. You can think of it as an electrical wire for light, or an optical fiber etched into the chip."

Li's research group says that the technology they created is one of the most scalable approaches to optical computing now available, with the potential to be applied to massive systems like networked cloud computing servers in data centers across the globe.

"Our design architecture is scalable to a much, much bigger network," Li added, "and can tackle hard artificial intelligence tasks ranging from massive, high-resolution image identification to video processing and video image recognition."

"We feel our system is the most promising and scalable to that degree. Of course, this will need large-scale semiconductor production. Our design and the material used in the prototype are both highly compatible with semiconductor foundry processes."

Looking forward, Li said he could see optical computing devices like the one his team produced boosting current technology's processing capacity and allowing the next generation of artificial intelligence.

To take the next step in that direction, his research team will collaborate closely with UW ECE associate professor Arka Majumdar and assistant professor Sajjad Moazeni, both specialists in large-scale integrated photonics and microelectronics, to scale up the prototype they constructed.

And, once the technology has been scaled up enough, it will lend itself to future integration with energy-intensive data centers, speeding up the performance of cloud-based software applications while lowering energy consumption.

"The computers in today's data centers are already linked via optical fibers. This enables ultra-high bandwidth transmission, which is critical," Li said.

"Because fiber optics infrastructure is already in place, it's reasonable to do optical computing in such a setup. It's fantastic, and I believe the moment has come for optical computing to resurface."

~ Jai Krishna Ponnappan


See also:

Optical Computing, Optical Computing Core, AI, Machine Learning, AI Systems.