Philippa Foot introduced the ethical dilemma now known as the "trolley problem" in 1967.

Advances in artificial intelligence across many domains have sparked ethical debates about how these systems' decision-making processes should be designed.

There is widespread concern about AI's capacity to assess ethical challenges and respect societal values.

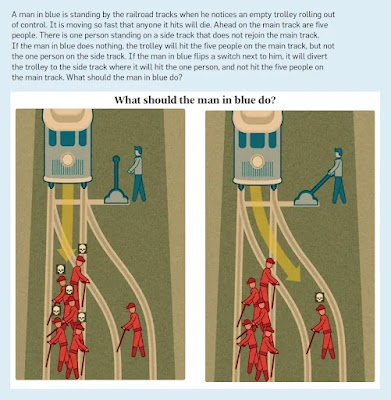

In this classic philosophical thought experiment, an operator stands near a trolley track, next to a lever that determines whether the trolley will continue on its current path or be diverted onto a different track.

Five people are standing on the track ahead of the trolley, unable to get out of the way and certain to be killed if the trolley continues on its current course.

On the other track stands one person, who will be killed if the operator pulls the lever.

This is a classic conflict between utilitarianism (actions should maximize the well-being of affected individuals) and deontology (whether an action is right or wrong depends on rules, as opposed to the consequences of the action).
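The contrast between the two positions can be made concrete with a small sketch. The code below is purely illustrative: the `Option` class, its field names, and the single rule used to stand in for deontology ("never actively intervene to cause a death") are assumptions introduced here, not a method described in the literature or used by any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """A hypothetical choice available to the operator."""
    name: str
    deaths: int
    requires_intervention: bool  # True if the operator must actively cause the harm


def utilitarian_choice(options):
    """Pick the option with the fewest deaths (a crude proxy for maximizing well-being)."""
    return min(options, key=lambda o: o.deaths)


def deontological_choice(options):
    """Apply one illustrative rule: never actively intervene to cause a death."""
    permitted = [o for o in options if not (o.requires_intervention and o.deaths > 0)]
    # If the rule forbids every option, fall back to considering all of them.
    candidates = permitted or list(options)
    return min(candidates, key=lambda o: o.deaths)


# The classic scenario: do nothing and five die, or pull the lever and one dies.
options = [
    Option("stay", deaths=5, requires_intervention=False),
    Option("pull_lever", deaths=1, requires_intervention=True),
]

print(utilitarian_choice(options).name)    # pull_lever
print(deontological_choice(options).name)  # stay
```

The same inputs produce opposite answers, which is the core of the dilemma: the disagreement lies in the decision rule, not in the facts of the scenario.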

With the development of artificial intelligence, the question has arisen of how we should program machines to behave in situations that are perceived as inescapable realities, such as the Trolley Problem.

The Trolley Problem has been examined in relation to artificial intelligence in fields such as primary health care, the operating room, security, self-driving automobiles, and weapons technology.

The subject has been studied most thoroughly in the context of self-driving automobiles, where regulations, guidelines, and norms have already been suggested or developed.

Autonomous vehicles have already driven millions of kilometers in the United States, so they already face this problem in practice.

The problem is made more urgent by the fact that a few users of self-driving cars have died while using the technology.

Accidents have sparked even greater public discussion over the proper use of this technology.

Moral Machine is an online platform created by a team at the Massachusetts Institute of Technology to crowdsource responses to questions about how self-driving automobiles should prioritize lives.

The makers of Moral Machine ask visitors to the website to decide what a self-driving automobile should do in a variety of Trolley Problem-style dilemmas.

Respondents must prioritize among the lives of car passengers and pedestrians, humans and animals, people walking legally or illegally, and people of various fitness levels and socioeconomic status, among other variables.

When respondents imagine themselves as passengers in the car, they almost always indicate that they would act to save their own lives.

Crowdsourced solutions may not be the best way to resolve Trolley Problem dilemmas.

Trading a pedestrian's life for a vehicle passenger's life, for example, may be seen as arbitrary and unjust.

The aggregated solutions currently do not seem to represent simple utilitarian calculations that maximize lives saved or favor one sort of life over another.

It's unclear who will get to decide how AI will be programmed and who will be held responsible if AI systems fail.

This responsibility could be assigned to policymakers, the corporation that develops the technology, or the people who end up using it.

Each of these options has its own set of ramifications that must be addressed.

The Trolley Problem's usefulness in resolving AI dilemmas is not universally accepted.

Some artificial intelligence and ethics scholars reject the Trolley Problem as a useful thinking exercise.

Their arguments usually center on the notion of trade-offs between different lives.

They claim that the Trolley Problem lends credence to the idea that these trade-offs (as well as autonomous vehicle disasters) are unavoidable.

In their view, policymakers and programmers should concentrate on the best ways to avoid a dilemma like the Trolley Problem, rather than on the best ways to react to its different scenarios.


See also:

Accidents and Risk Assessment; Air Traffic Control, AI and; Algorithmic Bias and Error; Autonomous Weapons Systems, Ethics of; Driverless Cars and Trucks; Moral Turing Test; Robot Ethics.
