The Macy Conferences on Cybernetics, which ran from 1946 to 1960, aimed to lay the groundwork for emerging interdisciplinary fields such as cybernetics, cognitive psychology, artificial life, and artificial intelligence.

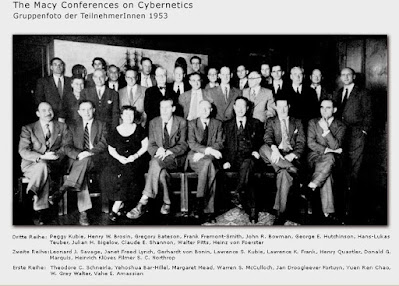

Prominent twentieth-century scholars and researchers took part in the Macy Conferences' freewheeling debates, including psychiatrist W. Ross Ashby, anthropologist Gregory Bateson, ecologist G. Evelyn Hutchinson, psychologist Kurt Lewin, philosopher Donald Marquis, neurophysiologist Warren McCulloch, cultural anthropologist Margaret Mead, economist Oskar Morgenstern, statistician Leonard Savage, and physicist Heinz von Foerster. McCulloch, a neurophysiologist at the Massachusetts Institute of Technology's Research Laboratory of Electronics, and von Foerster, a professor of signal engineering at the University of Illinois at Urbana-Champaign and coeditor with Mead of the published Macy Conference proceedings, were the two main organizers of the conferences.

All meetings were sponsored by the Josiah Macy Jr. Foundation, a nonprofit organization.

The conferences were initiated by Macy administrators Frank Fremont-Smith and Lawrence K. Frank, who hoped the meetings would spark interdisciplinary discussion.

The disciplinary isolation of medical research was a major worry for Fremont-Smith and Frank.

The Macy meetings were preceded by a Macy-sponsored symposium on Cerebral Inhibitions in 1942, at which Harvard physiology professor Arturo Rosenblueth presented the first public discussion of cybernetics, titled "Behavior, Purpose, and Teleology."

The ten conferences held between 1946 and 1953 focused on circular causation and feedback processes in biological and social systems.

These sessions led to five transdisciplinary Group Processes Conferences, held between 1954 and 1960.

To foster direct conversation among participants, conference organizers avoided formal papers in favor of informal presentations.

The early Macy Conferences stressed the significance of control, communication, and feedback systems in the human nervous system.

Other subjects explored included the contrasts between analog and digital processing, switching circuit design and Boolean logic, game theory, servomechanisms, and communication theory.

These concerns fall under the umbrella of "first-order cybernetics."

Several biological issues were also discussed during the conferences, including adrenal cortex function, consciousness, aging, metabolism, nerve impulses, and homeostasis.

The sessions acted as a forum for discussing long-standing issues in what would eventually be referred to as artificial intelligence.

(Mathematician John McCarthy coined the phrase "artificial intelligence" at Dartmouth College in 1955.) Gregory Bateson, for example, gave a lecture at the inaugural Macy Conference that distinguished between "learning" and "learning to learn" based on his anthropological research, and encouraged listeners to consider how a computer might perform either task.

At the eighth conference, attendees discussed decision theory research in a session led by Leonard Savage.

At the ninth conference, Ross Ashby proposed the notion of chess-playing automata.

The usefulness of automatic computers as logical models of human cognition was discussed more than any other issue during the Macy Conferences.

The Macy Conferences gave rise to the American Society for Cybernetics, a professional organization founded in 1964.

Ideas from the early Macy Conference discussions of feedback mechanisms were later applied to topics as varied as artillery control, project management, and marital therapy.

See also:

Cybernetics and AI; Dartmouth AI Conference.
