ELIZA is a conversational computer program created by German-American computer scientist Joseph Weizenbaum at the Massachusetts Institute of Technology between 1964 and 1966.

Weizenbaum developed ELIZA as part of a pioneering artificial intelligence research team, led by Marvin Minsky, on the DARPA-funded Project MAC (Mathematics and Computation).

Weizenbaum named ELIZA after Eliza Doolittle, the fictional character in the play Pygmalion who learns to speak proper English; the play had been adapted into the successful film My Fair Lady in 1964.

ELIZA was created with the goal of allowing a person to communicate with a computer system in plain English.

ELIZA's popularity among users turned Weizenbaum into an AI skeptic.

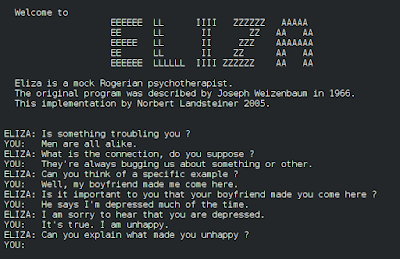

When communicating with ELIZA, users may type any statement into the system's open-ended interface.

ELIZA often responds by asking a question, much like a Rogerian psychologist attempting to probe deeper into the patient's underlying thoughts.

As the conversation continues, the program recycles portions of the user's own statements, giving the impression that ELIZA is genuinely listening.
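
The recycling of a user's words can be illustrated with a minimal Python sketch. The `REFLECTIONS` table, the function names, and the "Why do you say" frame are hypothetical choices made for illustration; they are not taken from Weizenbaum's script.

```python
import re

# Hypothetical pronoun swap table (illustrative only, not Weizenbaum's script).
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "myself": "yourself",
    "am": "are",
}


def reflect(fragment: str) -> str:
    """Swap first-person words for second-person ones, word by word."""
    words = re.findall(r"[a-z']+", fragment.lower())
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def echo_as_question(statement: str) -> str:
    """Wrap the reflected statement in a question that reuses its wording."""
    return f"Why do you say {reflect(statement)}?"


print(echo_as_question("I am unhappy with my job"))
# prints: Why do you say you are unhappy with your job?
```

Even this crude word-for-word swap returns the user's own phrasing, which is enough to suggest attentiveness without any understanding of the sentence.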

In fact, Weizenbaum designed ELIZA around a tree-like decision structure.

The user's statements are first screened for keywords.

If more than one keyword is found, the keywords are ranked in order of significance.

For example, if a user types "I suppose everybody laughs at me," the most important word for ELIZA to respond to is "everybody," not "I."
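
The keyword screening and ranking step might look roughly like the following Python sketch. The `KEYWORD_RANK` table and its weights are assumptions invented for this example; only the relative ordering of "everybody" above "I" comes from the text.

```python
import re

# Hypothetical significance weights; a higher number means a more important keyword.
KEYWORD_RANK = {"everybody": 5, "always": 4, "mother": 3, "i": 1}


def ranked_keywords(statement: str) -> list[str]:
    """Return the known keywords in a statement, most significant first."""
    words = re.findall(r"[a-z']+", statement.lower())
    # dict.fromkeys drops repeated keywords while keeping first-seen order.
    found = list(dict.fromkeys(w for w in words if w in KEYWORD_RANK))
    return sorted(found, key=lambda w: KEYWORD_RANK[w], reverse=True)


print(ranked_keywords("I suppose everybody laughs at me"))
# prints: ['everybody', 'i']
```

The program would then respond to the first keyword in the returned list.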

To generate a response, the program then uses a set of algorithms to build a suitable sentence structure around those keywords.

Alternatively, if the user's input does not include any words found in ELIZA's database, the program falls back on a content-free remark or repeats an earlier response.
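
A rough Python sketch of this response step, under stated assumptions, is shown here. The `RULES` patterns, the response templates, the `FALLBACKS` remarks, and the `Responder` class are all hypothetical, and the option of repeating an earlier answer is left out for brevity.

```python
import itertools
import re

# Hypothetical rules, listed from most to least significant keyword.
# Each pairs a pattern that picks the input apart with a template that
# builds a reply around the captured fragment (if any).
RULES = [
    (re.compile(r"\beverybody\b", re.I), "Can you think of anybody in particular?"),
    (re.compile(r"\bi feel (.+)", re.I), "Why do you feel {0}?"),
]

# Hypothetical content-free remarks used when no keyword is found.
FALLBACKS = ["Please go on.", "I see.", "Tell me more."]


class Responder:
    def __init__(self) -> None:
        # Cycle through the fallbacks so consecutive replies differ.
        self._fallbacks = itertools.cycle(FALLBACKS)

    def respond(self, statement: str) -> str:
        text = statement.strip().rstrip(".!?")
        for pattern, template in RULES:
            match = pattern.search(text)
            if match:
                return template.format(*match.groups())
        return next(self._fallbacks)


bot = Responder()
print(bot.respond("I suppose everybody laughs at me"))
print(bot.respond("I feel tired today."))
print(bot.respond("The weather is nice"))
```

Because the rules are checked in order of significance, the first matching keyword decides the reply, and any input without a keyword still receives an answer.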

Weizenbaum created ELIZA to investigate the meaning of machine intelligence.

In a 1962 article in Datamation, Weizenbaum explained that he drew his inspiration from a remark made by MIT cognitive scientist Marvin Minsky.

"Intelligence was just a characteristic human observers were willing to assign to processes they didn't comprehend, and only for as long as they didn't understand them," Minsky had claimed (Weizenbaum 1962).

If that was the case, Weizenbaum concluded, then the main goal of artificial intelligence was to "fool certain onlookers for a while" (Weizenbaum 1962).

ELIZA was designed to do precisely that: it gives users plausible answers while concealing how little the program actually understands, sustaining the user's belief in its intelligence a little longer.

Weizenbaum was taken aback by how successful ELIZA became.

By the late 1960s, ELIZA's Rogerian script had become popular as a program renamed DOCTOR at MIT and had spread to other university campuses, where the program was rebuilt from Weizenbaum's 1966 description published in the journal Communications of the Association for Computing Machinery.

The program fooled (too) many users, even some who were well versed in its methods.

Most notably, some users became so engrossed with ELIZA that they insisted that others leave the room so they could have a private session with "the" DOCTOR.

But it was the psychiatric community's reaction that made Weizenbaum deeply skeptical of contemporary artificial intelligence ambitions in general, and of promises that computers could understand natural language in particular.

Kenneth Colby, a Stanford University psychiatrist with whom Weizenbaum had previously collaborated, developed PARRY at about the same time that Weizenbaum released ELIZA.

Colby, unlike Weizenbaum, thought that programs like PARRY and ELIZA were beneficial to psychology and public health.

According to him, they aided the development of diagnostic tools and would enable one psychiatric computer to treat hundreds of patients.

Weizenbaum's worries, and his emotional plea to the community of computer scientists, were eventually set out in his book Computer Power and Human Reason (1976).

In this book, which was hotly debated at the time, Weizenbaum railed against those who ignored the fundamental differences between man and machine, arguing that "there are some things that computers ought not to execute, regardless of whether computers can be persuaded to do them" (Weizenbaum 1976, x).

~ Jai Krishna Ponnappan

See also:

Chatbots and Loebner Prize; Expert Systems; Minsky, Marvin; Natural Language Processing and Speech Understanding; PARRY; Turing Test

Further Reading:

McCorduck, Pamela. 1979. Machines Who Think: A Personal Inquiry into the History and Prospects of Artificial Intelligence, 251–56, 308–28. San Francisco: W. H. Freeman and Company.

Weizenbaum, Joseph. 1962. “How to Make a Computer Appear Intelligent: Five in a Row Offers No Guarantees.” Datamation 8 (February): 24–26.

Weizenbaum, Joseph. 1966. “ELIZA: A Computer Program for the Study of Natural Language Communication between Man and Machine.” Communications of the ACM 9, no. 1 (January): 36–45.

Weizenbaum, Joseph. 1976. Computer Power and Human Reason: From Judgment to Calculation. San Francisco: W. H. Freeman and Company.