Project SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics) is a collaborative cognitive computing effort sponsored by the Defense Advanced Research Projects Agency (DARPA) to develop the architecture for a brain-inspired neurosynaptic computer core.

The project, which began in 2008, is a collaboration between IBM Research, HRL Laboratories, and Hewlett-Packard. Researchers from a number of universities are also involved in the project.

The acronym SyNAPSE comes from the Ancient Greek word σύναψις (sýnapsis), which means "conjunction" and refers to the neural connections that pass information between neurons.

The project's purpose is to reverse-engineer the functional intelligence of rats, cats, or potentially humans to produce a flexible, ultra-low-power system for use in robots.

The initial DARPA announcement called for a machine that could "scale to biological levels" and break through the "algorithmic-computational paradigm" (DARPA 2008, 4). In other words, DARPA wanted an electronic computer that could analyze real-world complexity and respond to external inputs in near-real time.

SyNAPSE responds to the need for computer systems that can adapt to changing circumstances and understand their environment while remaining energy efficient. SyNAPSE scientists are working on neuromorphic electronic systems that are analogous to biological nervous systems and capable of processing data from complex environments. It is envisaged that such systems will eventually gain considerable autonomy.

The SyNAPSE project takes an interdisciplinary approach, drawing on concepts from areas as diverse as computational neuroscience, artificial neural networks, materials science, and cognitive science.

SyNAPSE will require advances in basic science and engineering in the following areas:

- Architecture: to support structures and functions that arise in biological systems;

- Simulation: the digital replication of systems to verify their functioning before physical neuromorphic systems are built.

In 2008, IBM Research and HRL Laboratories received the first SyNAPSE grant. IBM and HRL subcontracted various parts of the grant requirements to a range of vendors and contractors. The project was divided into four phases, each of which began after a nine-month feasibility assessment.

The first simulator, C2, was released in 2009 and ran on a Blue Gene/P supercomputer, performing cortical simulations with 10⁹ neurons and 10¹³ synapses, comparable in scale to a cat's brain. The software was criticized after the leader of the Blue Brain Project stated that the simulation did not reach the claimed complexity.

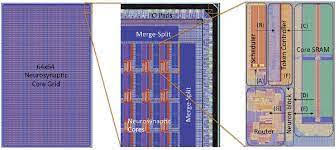

Each neurosynaptic core measures 2 millimeters by 3 millimeters, and its design is modeled on the biology of the human brain. The relationship between the cores and actual brains is more symbolic than literal: computation stands in for real neurons, memory for synapses, and communication for axons and dendrites. This mapping lets the team express a biological system as a hardware implementation.
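To make the neuron/synapse/axon analogy concrete, here is a toy sketch in which a binary crossbar plays the role of memory (synapses), simple integrate-and-fire neurons play the role of computation, and the list of neurons that fire is what would be communicated to other cores. Everything here is a hypothetical illustration: the `ToyCore` class, its uniform `weight`, `threshold`, and `leak` parameters, and the update order are assumptions chosen for clarity, not IBM's actual core design.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCore:
    """Toy neurosynaptic core (hypothetical; not IBM's TrueNorth design)."""

    # Memory: crossbar[i][j] == 1 means input axon i has a synapse onto neuron j.
    crossbar: list[list[int]]
    weight: int = 1      # hypothetical uniform synaptic weight
    threshold: int = 2   # hypothetical firing threshold
    leak: int = 0        # hypothetical per-tick leak toward zero
    potentials: list[int] = field(default_factory=list, init=False)

    def __post_init__(self) -> None:
        # One membrane potential per neuron (one per crossbar column).
        self.potentials = [0] * len(self.crossbar[0])

    def tick(self, input_spikes: list[int]) -> list[int]:
        """Advance one time step and return the indices of neurons that fired."""
        fired = []
        for j in range(len(self.potentials)):
            # Computation: integrate spikes arriving on connected axons.
            for i, spike in enumerate(input_spikes):
                if spike and self.crossbar[i][j]:
                    self.potentials[j] += self.weight
            # Apply leak, clamping the potential at zero.
            self.potentials[j] = max(0, self.potentials[j] - self.leak)
            # Fire and reset when the threshold is reached.
            if self.potentials[j] >= self.threshold:
                fired.append(j)
                self.potentials[j] = 0
        # Communication: this spike list would be routed to other cores' axons.
        return fired


core = ToyCore(crossbar=[[1, 0], [1, 1], [0, 1]])
print(core.tick([1, 1, 0]))  # neuron 0 receives two spikes and fires: [0]
print(core.tick([0, 0, 1]))  # neuron 1 reaches threshold on the second tick: [1]
```

Keeping synaptic state in the crossbar and neuron state in a small per-core array reflects the idea that memory and computation sit side by side in each core, with only spikes crossing between cores.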

HRL Laboratories stated in 2012 that it had created the world's first working memristor array layered atop a conventional CMOS circuit. The term "memristor," a blend of "memory" and "resistor," was coined in the 1970s. A memristor integrates memory and logic functions.

In 2012, project organizers reported a successful large-scale simulation of 530 billion neurons and 100 trillion synapses on the Blue Gene/Q Sequoia machine at Lawrence Livermore National Laboratory in California, then the world's second-fastest supercomputer.

In 2014, IBM presented the TrueNorth processor, a 5.4-billion-transistor chip with 4096 neurosynaptic cores connected by an intrachip network, together providing 1 million programmable spiking neurons and 256 million configurable synapses.
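The headline TrueNorth figures follow from the chip's per-core organization. The quick check below assumes that each core holds 256 neurons and a 256 × 256 synaptic crossbar (figures from IBM's published descriptions of the chip, not stated in this article, so treat them as assumptions here); the function name `truenorth_totals` is purely illustrative.

```python
# Per-core figures are assumptions taken from published TrueNorth descriptions.
CORES = 4096
NEURONS_PER_CORE = 256
AXONS_PER_CORE = 256  # each core's crossbar is AXONS_PER_CORE x NEURONS_PER_CORE


def truenorth_totals(cores: int = CORES,
                     neurons_per_core: int = NEURONS_PER_CORE,
                     axons_per_core: int = AXONS_PER_CORE) -> tuple[int, int]:
    """Return (total neurons, total synapses) for a grid of identical cores."""
    neurons = cores * neurons_per_core
    # One synapse at every axon/neuron crossing in each core's crossbar.
    synapses = cores * axons_per_core * neurons_per_core
    return neurons, synapses


neurons, synapses = truenorth_totals()
print(f"{neurons:,} neurons, {synapses:,} synapses")
# prints "1,048,576 neurons, 268,435,456 synapses"
```

Both totals are powers of two, 2^20 neurons and 2^28 synapses, which the round figures "1 million" and "256 million" approximate (256 × 2^20 = 2^28).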

Finally, in 2016, an end-to-end ecosystem (including scalable systems, software, and applications) that could make full use of the TrueNorth chip was unveiled. Applications reported at the time included interactive handwritten character recognition and data-parallel text extraction and recognition.

TrueNorth's cognitive computing chips have since been tested in simulations, including driving a virtual-reality robot and playing the popular video game Pong.

DARPA has been interested in building brain-inspired computer systems since the 1980s.

Dharmendra Modha, director of IBM Almaden's Cognitive Computing Initiative, and Narayan Srinivasa, head of HRL's Center for Neural and Emergent Systems, are leading Project SyNAPSE.

Find Jai on Twitter | LinkedIn | Instagram
