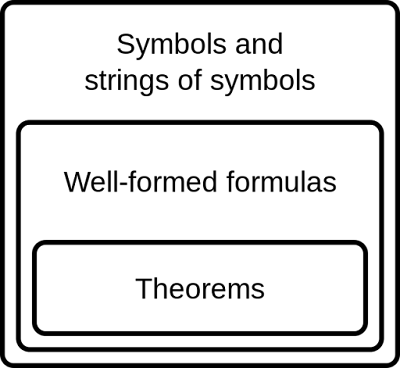

In mathematical and philosophical reasoning, symbolic logic entails the use of symbols to express concepts, relations, and positions. Symbolic logic differs from (Aristotelian) syllogistic logic in that it employs ideographs or a special notation to "symbolize exactly the item discussed" (Newman 1956, 1852), and its expressions can be manipulated according to precise rules.

Traditional logic investigated the truth and falsehood of assertions, and the relationships among them, using terminology drawn from natural language. Unlike nouns and verbs, symbols can be manipulated without being interpreted. Because operations on symbols are mechanical, they can be delegated to computers. By codifying logical analysis entirely within a defined notational framework, symbolic logic removes the ambiguity that natural language introduces.
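The following minimal sketch shows what purely mechanical symbol operations look like. It assumes propositional formulas are written as Python functions of boolean variables, and the helper names `is_tautology` and `implies` are hypothetical. The program never asks what `p` or `q` mean. It simply enumerates every truth assignment, which is rule-governed work of the kind that can be handed to a computer.

```python
from itertools import product
from typing import Callable

def is_tautology(formula: Callable[..., bool], num_vars: int) -> bool:
    """True if `formula` evaluates to True under every assignment of its variables."""
    # Mechanically enumerate all 2**num_vars combinations of True/False.
    return all(formula(*values) for values in product((False, True), repeat=num_vars))

def implies(p: bool, q: bool) -> bool:
    # Material implication: "p -> q" is false only when p is true and q is false.
    return (not p) or q

def modus_ponens(p: bool, q: bool) -> bool:
    # ((p -> q) and p) -> q : a valid argument form
    return implies(implies(p, q) and p, q)

def affirming_the_consequent(p: bool, q: bool) -> bool:
    # ((p -> q) and q) -> p : a classic fallacy
    return implies(implies(p, q) and q, p)

print(is_tautology(modus_ponens, 2))              # True
print(is_tautology(affirming_the_consequent, 2))  # False
```

The checker separates a valid argument form from a fallacy without any appeal to the meaning of its symbols.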

Gottfried Wilhelm Leibniz (1646–1716) is widely regarded as the founding father of symbolic logic. In the seventeenth century, as part of his goal to revolutionize scientific thinking, Leibniz proposed using ideographic symbols instead of natural language. He hoped that by combining such concise universal symbols (characteristica universalis) with a set of rules for scientific reasoning, he could create an alphabet of human thought. This alphabet would promote the growth and dissemination of scientific knowledge and would support a corpus containing all human knowledge.

Symbolic logic can be divided into subfields, including Boolean logic, the logical foundations of mathematics, and decision problems. George Boole, Alfred North Whitehead and Bertrand Russell, and Kurt Gödel made landmark contributions to these fields.

In the mid-nineteenth century, George Boole published The Mathematical Analysis of Logic (1847) and An Investigation of the Laws of Thought (1854). Boole focused on a calculus of deductive reasoning. This work led him to the three essential operations of the logical-mathematical language known as Boolean algebra: AND, OR, and NOT. The use of symbols and operators greatly simplified the construction of logical formulas.
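As an illustrative sketch, Boole's three operations can be written over the values 0 and 1 and used to check algebraic laws by exhaustion. The arithmetic encoding below is assumed for illustration: x*y for AND, x + y - x*y for OR, and 1 - x for NOT. It is a common modern rendering, not a transcription of Boole's own notation, and the function names are hypothetical.

```python
from itertools import product

# Truth values are 0 (false) and 1 (true). This arithmetic encoding is a common
# modern rendering of Boole's operations, not a transcription of his notation.
def AND(x: int, y: int) -> int:
    return x * y              # 1 only when both inputs are 1

def OR(x: int, y: int) -> int:
    return x + y - x * y      # inclusive or: 1 when at least one input is 1

def NOT(x: int) -> int:
    return 1 - x              # complement

def holds_for_all(law, arity: int) -> bool:
    """Check an identity by trying every 0/1 assignment of its variables."""
    return all(law(*values) for values in product((0, 1), repeat=arity))

def de_morgan(x, y):
    return NOT(AND(x, y)) == OR(NOT(x), NOT(y))

def distributive(x, y, z):
    return AND(x, OR(y, z)) == OR(AND(x, y), AND(x, z))

def idempotent(x):
    # x * x = x holds for 0 and 1 but not for ordinary numbers such as 2
    return AND(x, x) == x

print(holds_for_all(de_morgan, 2), holds_for_all(distributive, 3), holds_for_all(idempotent, 1))
# True True True
```

The idempotent law is where this algebra parts ways with ordinary arithmetic. It holds only because the variables are restricted to 0 and 1.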

In the twentieth century, Claude Shannon (1916–2001) used electromechanical relay circuits and switches to implement Boolean algebra. This work laid crucial foundations for electronic digital computing and for computer science in general.
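The correspondence between switching circuits and Boolean algebra can be sketched with a simple assumed model:

- contacts wired in series behave like AND;
- contacts wired in parallel behave like OR;
- a normally closed ("break") contact behaves like NOT.

The classes `Make`, `Break`, `Series`, and `Parallel` are hypothetical names for this illustration, not a reconstruction of Shannon's own notation.

```python
from dataclasses import dataclass
from typing import Mapping, Union

@dataclass(frozen=True)
class Make:
    relay: str    # normally open contact: conducts when its relay is energized

@dataclass(frozen=True)
class Break:
    relay: str    # normally closed contact: conducts when its relay is NOT energized

@dataclass(frozen=True)
class Series:
    parts: tuple  # conducts only if every part conducts (AND)

@dataclass(frozen=True)
class Parallel:
    parts: tuple  # conducts if at least one part conducts (OR)

Circuit = Union[Make, Break, Series, Parallel]

def conducts(circuit: Circuit, energized: Mapping[str, bool]) -> bool:
    """Decide whether current can flow through a contact network."""
    if isinstance(circuit, Make):
        return energized[circuit.relay]
    if isinstance(circuit, Break):
        return not energized[circuit.relay]
    if isinstance(circuit, Series):
        return all(conducts(part, energized) for part in circuit.parts)
    if isinstance(circuit, Parallel):
        return any(conducts(part, energized) for part in circuit.parts)
    raise TypeError(f"unknown circuit element: {circuit!r}")

# A AND (B OR NOT C): contact A in series with B and a break contact on C in parallel
lamp = Series((Make("A"), Parallel((Make("B"), Break("C")))))
print(conducts(lamp, {"A": True, "B": False, "C": False}))  # True
print(conducts(lamp, {"A": True, "B": False, "C": True}))   # False
```

Evaluating the wiring diagram is the same as evaluating the Boolean expression it encodes. This is why circuit design can be reasoned about algebraically.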

Alfred North Whitehead and Bertrand Russell produced their seminal work in symbolic logic in the early twentieth century. Their Principia Mathematica (1910, 1912, 1913) set out to show that all of mathematics can be reduced to symbolic logic.

In the first volume of their work, Whitehead and Russell developed a logical system from a handful of primitive logical concepts and a set of postulates stated in terms of those concepts. In the second volume of the Principia, they defined all mathematical concepts, including number, zero, successor, addition, and multiplication. They did so using only fundamental logical terms and operations such as proposition, negation, and either-or.

The Principia showed how mathematical postulates could be inferred from previously established symbolic logical truths.
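Deriving a theorem from established truths is a step-by-step process that a program can check. The following minimal sketch assumes formulas are encoded as nested tuples and uses toy premises rather than the Principia's actual axioms. It accepts a derivation only when every line is a premise or follows from two earlier lines by modus ponens, one of the inference rules the Principia relies on. All names are hypothetical.

```python
from typing import Sequence, Set, Union

# Hypothetical representation: an atom is a string; "A -> B" is the tuple ("->", A, B).
Formula = Union[str, tuple]

def imp(a: Formula, b: Formula) -> Formula:
    return ("->", a, b)

def check_proof(premises: Set[Formula], proof: Sequence[Formula]) -> bool:
    """Accept a derivation only if each line is a premise or follows by modus ponens."""
    proved: list = []
    for line in proof:
        from_premise = line in premises
        # Modus ponens: from earlier lines A and A -> line, infer line.
        from_modus_ponens = any(imp(a, line) in proved for a in proved)
        if not (from_premise or from_modus_ponens):
            return False
        proved.append(line)
    return True

premises = {"p", imp("p", "q"), imp("q", "r")}
valid = ["p", imp("p", "q"), "q", imp("q", "r"), "r"]
invalid = ["p", "r"]  # "r" does not follow from "p" alone

print(check_proof(premises, valid), check_proof(premises, invalid))  # True False
```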

Only two decades later, Kurt Gödel's On Formally Undecidable Propositions of Principia Mathematica and Related Systems (1931) critically examined the Principia's ambitious claims. Gödel demonstrated that Whitehead and Russell's axiomatic system could not be both consistent and complete.
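Gödel's proof rests on arithmetization: each formula is assigned a unique natural number, so that statements about formulas become statements about numbers. The sketch below uses a prime-power encoding in the spirit of Gödel's numbering. The six-symbol alphabet and its codes are hypothetical, chosen only for illustration. Unique prime factorization guarantees that the number can be decoded back into the formula.

```python
from itertools import count
from typing import Iterator

# Hypothetical symbol codes for a tiny arithmetic alphabet (not Gödel's own table).
SYMBOL_CODES = {"0": 1, "S": 2, "=": 3, "+": 4, "(": 5, ")": 6}
CODE_SYMBOLS = {code: symbol for symbol, code in SYMBOL_CODES.items()}

def primes() -> Iterator[int]:
    """Yield 2, 3, 5, 7, ... by trial division (adequate for short formulas)."""
    found = []
    for candidate in count(2):
        if all(candidate % p for p in found):
            found.append(candidate)
            yield candidate

def godel_number(formula: str) -> int:
    # The i-th symbol contributes (i-th prime) ** (code of that symbol).
    number = 1
    for p, symbol in zip(primes(), formula):
        number *= p ** SYMBOL_CODES[symbol]
    return number

def decode(number: int) -> str:
    # Unique prime factorization lets us read the exponents back in order.
    symbols = []
    for p in primes():
        if number == 1:
            break
        exponent = 0
        while number % p == 0:
            number //= p
            exponent += 1
        symbols.append(CODE_SYMBOLS[exponent])
    return "".join(symbols)

n = godel_number("S0=S0")  # "1 = 1", with S read as successor
print(n)           # 808500
print(decode(n))   # S0=S0
```

Here 808500 = 2^2 * 3^1 * 5^3 * 7^2 * 11^1, one prime per symbol position.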

Even so, it took another important book in symbolic logic, Ernst Nagel and James Newman's Gödel's Proof (1958), to bring Gödel's message to a wider audience, including some artificial intelligence practitioners.

Each of these seminal works influenced the development of computing and programming in a different way, and with it our understanding of what computers can do. Boolean logic made its way into the design of logic circuits.
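A standard textbook illustration of Boolean logic in circuit design is the binary adder. This sketch assumes bits are represented as the integers 0 and 1 and uses hypothetical function names. It builds exclusive-or, a half adder, and a full adder from AND, OR, and NOT, then chains full adders into a ripple-carry adder.

```python
from typing import List, Tuple

def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def NOT(a: int) -> int:
    return 1 - a

def XOR(a: int, b: int) -> int:
    # Exclusive or, built only from AND, OR, and NOT
    return AND(OR(a, b), NOT(AND(a, b)))

def half_adder(a: int, b: int) -> Tuple[int, int]:
    return XOR(a, b), AND(a, b)  # (sum bit, carry bit)

def full_adder(a: int, b: int, carry_in: int) -> Tuple[int, int]:
    # Two half adders; a carry out occurs if either stage produced one.
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, OR(c1, c2)

def add_bits(x: List[int], y: List[int]) -> List[int]:
    """Ripple-carry addition of equal-length bit lists, least significant bit first."""
    result, carry = [], 0
    for a, b in zip(x, y):
        s, carry = full_adder(a, b, carry)
        result.append(s)
    return result + [carry]

# 6 + 3, with 6 = [0, 1, 1] and 3 = [1, 1, 0] written least significant bit first
print(add_bits([0, 1, 1], [1, 1, 0]))  # [1, 0, 0, 1], i.e. 9
```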

Simon and Newell's Logic Theorist program produced proofs of theorems found in Principia Mathematica. It was therefore seen as evidence that a computer could be programmed to perform intelligent tasks through symbol manipulation.
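Proof search by symbol manipulation can be illustrated with a heavily simplified sketch. The Logic Theorist worked backward from the theorem to be proved. The function below imitates only one of its methods, backward use of detachment (modus ponens): to prove B, look for a known implication A -> B and try to prove A. It omits substitution and the program's other heuristics. It also uses toy premises instead of the Principia's axioms, and all names are hypothetical.

```python
from typing import FrozenSet, Set, Union

# Hypothetical representation: an atom is a string; "A -> B" is the tuple ("->", A, B).
Formula = Union[str, tuple]

def imp(a: Formula, b: Formula) -> Formula:
    return ("->", a, b)

def prove(goal: Formula, known: Set[Formula], pending: FrozenSet[Formula] = frozenset()) -> bool:
    """Work backward from `goal` using detachment over known implications."""
    if goal in known:
        return True
    if goal in pending:  # already trying to prove this goal: stop circular search
        return False
    for fact in known:
        # Backward detachment: to obtain B, find a known A -> B and make A a subgoal.
        if isinstance(fact, tuple) and fact[0] == "->" and fact[2] == goal:
            if prove(fact[1], known, pending | {goal}):
                return True
    return False

known = {"p", imp("p", "q"), imp("q", "r"), imp("s", "t")}
print(prove("r", known))  # True: r from q, q from p
print(prove("t", known))  # False: s is never established
```

The `pending` set keeps circular implications such as a -> b and b -> a from sending the search into an infinite loop.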

Gödel's incompleteness theorems raise intriguing questions about whether, and how, programmed machine intelligence, particularly strong AI, can ultimately be realized.


See also:

Symbol Manipulation.

References And Further Reading

Boole, George. 1854. An Investigation of the Laws of Thought on Which Are Founded the Mathematical Theories of Logic and Probabilities. London: Walton.

Lewis, Clarence Irving. 1932. Symbolic Logic. New York: The Century Co.

Nagel, Ernst, and James R. Newman. 1958. Gödel’s Proof. New York: New York University Press.

Newman, James R., ed. 1956. The World of Mathematics, vol. 3. New York: Simon and Schuster.

Whitehead, Alfred N., and Bertrand Russell. 1910–1913. Principia Mathematica. Cambridge, UK: Cambridge University Press.