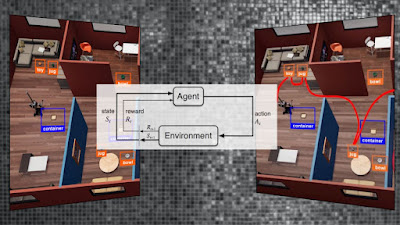

Embodied Artificial Intelligence is a method for developing AI that is both theoretical and practical.

Its history is difficult to trace fully because it has roots in several different fields. Rodney Brooks' "Intelligence Without Representation," written in 1987 and published in 1991, is one claimed origin of the concept.

Embodied AI is nonetheless still a fairly new area, with some of the first references to it by name dating back to the early 2000s.

Rather than focusing on either modeling the brain (connectionism/neural networks) or linguistic-level conceptual encoding (GOFAI, or the Physical Symbol System Hypothesis), the embodied approach to AI considers the mind (or intelligent behavior) to emerge from interaction between the body and the world.

There are hundreds of different, and sometimes contradictory, approaches to interpreting the role of the body in cognition, most of which use the term "embodied." What all of these viewpoints share is the idea that the shape of the physical body is related to the structure and content of the mind.

Despite the success of neural network and GOFAI (Good Old-Fashioned Artificial Intelligence, or classical symbolic artificial intelligence) techniques in building narrow expert systems, the embodied approach contends that general artificial intelligence cannot be achieved in code alone.

For example, consider a small robot with four motors, each driving a separate wheel, and code that directs the robot to avoid obstacles. The same code might produce dramatically different observable behaviors if the wheels were moved to different parts of the body or replaced with articulated legs.

This simple example shows why the shape of a body must be taken into account when designing robotic systems. It also shows why embodied AI, rather than robotics alone, regards the dynamic interaction between the body and its surroundings as the source of sometimes surprising emergent behaviors.
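The four-motor thought experiment can be made concrete with a toy calculation. The sketch below runs one identical controller on two bodies that differ only in where the motors are mounted. It rests on loudly stated assumptions. The controller `avoid_left_obstacle`, the two wheel layouts, and the `body_motion` model are all hypothetical simplifications, not a real robot model. In `body_motion`, every wheel pushes straight ahead, and turning is estimated from the net torque about the body's center.

```python
from typing import List, Sequence, Tuple

# (x, y) mount position of a wheel: x points forward, y points to the left.
Wheel = Tuple[float, float]

def avoid_left_obstacle() -> List[float]:
    """Hypothetical controller, identical on every body:
    drive motors 0 and 1 forward and motors 2 and 3 backward."""
    return [1.0, 1.0, -1.0, -1.0]

def body_motion(wheels: Sequence[Wheel], commands: Sequence[float]) -> Tuple[float, float]:
    """Crude rigid-body estimate of (forward push, turning tendency).

    Assumes every wheel pushes along the body's forward (x) axis. The forward
    push is the mean wheel command. The turning tendency is the net torque
    about the center, sum(-y * command), normalized by sum(x**2 + y**2).
    Positive turning means counter-clockwise (a left turn).
    """
    forward = sum(commands) / len(commands)
    torque = sum(-y * c for (_, y), c in zip(wheels, commands))
    spread = sum(x * x + y * y for x, y in wheels)
    return forward, torque / spread

# Body A: motors 0 and 1 on the left side, motors 2 and 3 on the right side.
SIDE_MOUNTED: List[Wheel] = [(1.0, 1.0), (-1.0, 1.0), (1.0, -1.0), (-1.0, -1.0)]
# Body B: the same four motors, moved so that 0 and 1 are at the front and 2 and 3 at the rear.
FRONT_REAR: List[Wheel] = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]

if __name__ == "__main__":
    command = avoid_left_obstacle()
    print("side-mounted:", body_motion(SIDE_MOUNTED, command))  # (0.0, -0.5): spins right
    print("front/rear:  ", body_motion(FRONT_REAR, command))    # (0.0, 0.0): stays put
```

With the motors mounted on the sides, the command spins the robot to the right, away from an obstacle on its left. With the same motors moved to the front and rear, the front and rear wheels push against each other and the robot does not move at all. Not a single line of control code changed between the two bodies.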

Passive dynamic walkers are an excellent illustration of this approach. The passive dynamic walker is a bipedal walking model that depends on the dynamic interaction between the design of its legs and the structure of its environment. Its gait is not generated by an active control system. Instead, the walker is propelled forward by gravity, inertia, the shapes of its feet and legs, and the incline of the slope it walks down.

This strategy is based on the biological concept of stigmergy.

- Stigmergy is based on the idea that signs or marks left by actions in the environment inspire future actions.
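Stigmergy can be illustrated with a small, deterministic toy model. The sketch assumes a hypothetical setting with two routes, `"A"` and `"B"`, plus a made-up deposit amount and a made-up evaporation rate. Each agent follows whichever route carries the strongest mark and then reinforces it. Agents never communicate directly; they coordinate only through the marks left in the environment.

```python
from collections import defaultdict
from typing import DefaultDict, Dict, List, Sequence, Tuple

Marks = DefaultDict[str, float]

def deposit(marks: Marks, route: str, amount: float = 1.0) -> None:
    """Leave a mark on the route just used (hypothetical fixed amount)."""
    marks[route] += amount

def evaporate(marks: Marks, rate: float = 0.1) -> None:
    """Every mark fades a little each step (hypothetical rate)."""
    for route in marks:
        marks[route] *= 1.0 - rate

def choose(marks: Marks, routes: Sequence[str]) -> str:
    """Take the most strongly marked route; ties go to the first route listed."""
    return max(routes, key=lambda route: marks[route])

def run_colony(n_agents: int, scout_route: str,
               routes: Sequence[str] = ("A", "B")) -> Tuple[List[str], Dict[str, float]]:
    marks: Marks = defaultdict(float)
    deposit(marks, scout_route)  # an earlier scout's trip left the first mark
    history: List[str] = []
    for _ in range(n_agents):
        route = choose(marks, routes)  # react to marks, not to other agents
        deposit(marks, route)          # leave a mark for the next agent
        evaporate(marks)
        history.append(route)
    return history, dict(marks)
```

Every later agent reinforces whichever route the scout happened to mark first, producing a trail that no agent planned. Models of real stigmergy, such as ant foraging, usually make the choice probabilistic so that weaker trails are still occasionally explored. The deterministic `max` here is a simplification that keeps the behavior easy to follow.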

AN APPROACH INFORMED BY ENGINEERING.

Embodied AI draws on a variety of fields; engineering and philosophy are two of the most common influences.

In 1986, Rodney Brooks proposed the "subsumption architecture." It generates complex behaviors by arranging lower-level layers of the system to interact with the environment in prioritized ways. It also tightly couples perception and action and attempts to eliminate the higher-level processing of other models.
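The layering idea can be sketched in a few lines. What follows is a deliberately simplified, fixed-priority arbitration in the spirit of subsumption. The behaviors (`avoid`, `follow_wall`, `wander`), sensor names, distance thresholds, and the `Command` type are all hypothetical. Each layer maps raw sensor readings directly to a motor command, and a layer that fires suppresses every layer listed after it. The priority order here, with the reflex first, is an assumption of this sketch. Brooks' original architecture wires layers of augmented finite-state machines together with suppression and inhibition links, and this sketch does not model that wiring.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Sequence

@dataclass
class Command:
    """Hypothetical motor command: forward speed and turn rate."""
    speed: float
    turn: float

Sensors = Dict[str, float]
# A behavior reacts to raw sensor values directly; there is no shared world model.
Behavior = Callable[[Sensors], Optional[Command]]

def avoid(sensors: Sensors) -> Optional[Command]:
    # Reflex: back away and turn when something is very close ahead.
    if sensors["front_distance"] < 0.2:
        return Command(speed=-0.1, turn=1.0)
    return None  # stay silent and let other layers act

def follow_wall(sensors: Sensors) -> Optional[Command]:
    # Keep a wall close on the right-hand side.
    if sensors["right_distance"] < 0.5:
        return Command(speed=0.3, turn=0.2)
    return None

def wander(sensors: Sensors) -> Optional[Command]:
    # Default behavior: always fires and just drives forward.
    return Command(speed=0.5, turn=0.0)

def arbitrate(layers: Sequence[Behavior], sensors: Sensors) -> Command:
    """Return the command of the first layer that fires.

    Layers are listed from highest to lowest priority, so a firing layer
    suppresses everything listed after it.
    """
    for layer in layers:
        command = layer(sensors)
        if command is not None:
            return command
    return Command(speed=0.0, turn=0.0)  # nothing fired: stop

LAYERS = [avoid, follow_wall, wander]
```

For example, `arbitrate(LAYERS, {"front_distance": 0.1, "right_distance": 0.3})` returns the `avoid` command even though `follow_wall` would also have fired. Readings with nothing nearby fall through to `wander`. Each layer couples perception to action on its own, so no step builds a central model of the robot and its surroundings.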

For example, Genghis, a robot now held by the Smithsonian, was built to traverse rugged terrain, a capability that other robots of the time found very difficult to achieve. Its success was primarily due to a design choice: the processing for its various motors and sensors was distributed throughout the network. The designers did not attempt higher-level system integration to build a full representational model of the robot and its surroundings. In other words, there was no central processing region where data from all of the robot's parts was integrated for the system.

Cog, a humanoid torso built by the MIT Humanoid Robotics Group in the 1990s, was an early effort at embodied AI. Cog was designed to learn about the world by interacting with it physically. For example, Cog could be shown learning how to apply force and weight to a drum while holding drumsticks for the first time, or learning how to gauge the weight of a ball placed in its hand. These early ideas of letting the body drive the learning are still at the heart of the embodied AI project.

The Swiss Robots, designed and built in the AI Lab at the University of Zurich, are perhaps one of the most prominent examples of embodied emergent intelligence. They were simple, small robots with two motors and two infrared sensors, one of each on either side. The only high-level instruction in their programming was that if a sensor detected an object on one side, the robot should move in the other direction. However, when combined with a particular body shape and sensor placement, this rule produced what appeared to be high-level cleaning or clustering behavior in certain situations.
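The Swiss Robots' rule can be written as a purely reactive controller. The sketch assumes hypothetical sensor geometry: sensors pointing 45 degrees to each side, each with a 20-degree half-angle field of view. It also assumes binary sensor readings and wheel speeds of plus or minus one. None of these values come from the original robots. The sketch makes the role of sensor placement explicit. An object directly ahead falls between the two sensor cones, is never detected, and is simply pushed along. This is how the clustering effect is commonly explained.

```python
from typing import Tuple

LEFT_SENSOR_DEG = 45.0    # hypothetical: left sensor points 45 degrees left
RIGHT_SENSOR_DEG = -45.0  # hypothetical: right sensor points 45 degrees right
HALF_FOV_DEG = 20.0       # hypothetical half-width of each sensor's cone

def sense(obstacle_bearing_deg: float) -> Tuple[bool, bool]:
    """Binary (left, right) readings for one obstacle at the given bearing.

    Bearing 0 is straight ahead; positive bearings are to the left.
    """
    left = abs(obstacle_bearing_deg - LEFT_SENSOR_DEG) <= HALF_FOV_DEG
    right = abs(obstacle_bearing_deg - RIGHT_SENSOR_DEG) <= HALF_FOV_DEG
    return left, right

def control(left: bool, right: bool) -> Tuple[float, float]:
    """(left_wheel, right_wheel) speeds: turn away from whichever side senses something."""
    if left and not right:
        return 1.0, -1.0   # obstacle on the left: spin right
    if right and not left:
        return -1.0, 1.0   # obstacle on the right: spin left
    if left and right:
        return 1.0, -1.0   # assumption: the rule is silent here, so spin right
    return 1.0, 1.0        # nothing sensed: drive straight ahead
```

Nothing in `control` mentions cubes, cleaning, or clustering. An object straight ahead (`sense(0.0)` returns `(False, False)`) is pushed forward. Only when another object enters a sensor cone does the robot turn away, often leaving the object it was pushing beside the one it detected. The apparent high-level behavior comes from this rule interacting with the body and the environment.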

A similar strategy is used in many other robotics projects.

Shakey the Robot, developed by SRI International in the 1960s, is often credited as the first mobile robot able to reason about its actions. Shakey was clumsy and slow. It is often portrayed as the polar opposite of what embodied AI is trying to achieve by moving away from higher-level reasoning and processing. However, even in 1968, SRI's approach to embodiment was a clear forerunner of Brooks'. Its researchers were the first to assert that the best store of knowledge about the real world is the real world itself. According to this notion, the best model of the world is the world itself, an idea that has become a rallying cry against higher-level representation in embodied AI.

In contrast to embodied AI systems, earlier robots were mostly preprogrammed and did not actively engage with their environments in the way this approach does. Honda's ASIMO robot, for example, is not a good illustration of embodied AI; instead, it represents other, older approaches to robotics. Work in embodied AI is currently growing rapidly, and Boston Dynamics' robots serve as excellent examples, particularly the non-humanoid forms.

Embodied AI is also influenced by a number of philosophical ideas. In a 1991 discussion of his subsumption architecture, the roboticist Rodney Brooks specifically rejects any philosophical influence on his technical concerns, while acknowledging that his arguments mirror Heidegger's. In several essential design aspects, his ideas also match those of the phenomenologist Merleau-Ponty. This shows that earlier philosophical questions at least reflect, and likely shape, much of the design thinking in embodied AI.

Research in embodied robotics experiments its way toward an understanding of how awareness and intelligent behavior arise. Because these are highly philosophical questions, the research is itself deeply philosophical.

A few embodied AI projects also take up explicitly philosophical themes. Rolf Pfeifer and Josh Bongard, for example, often draw on philosophical (and psychological) literature in their work, examining how these ideas intersect with their own approaches to developing intelligent machines. They discuss how these ideas can (and often do not) guide the development of embodied AI. Their inspirations cover a broad range. They include George Lakoff and Mark Johnson's work on conceptual metaphor, Shaun Gallagher's (2005) work on body image and phenomenology, and even John Dewey's early American pragmatism.

It is difficult to say how often philosophical concerns drive engineering concerns. Still, among the disciplines within cognitive science that have studied embodiment, philosophy is probably the most robust, because its theorizing began long before the tools and technologies existed to actually build the machines being imagined. This suggests that philosophy likely still holds unexplored resources for roboticists interested in the strong AI project, that is, building broad intellectual capacities and functions that mimic the human brain.


See also:

Brooks, Rodney; Distributed and Swarm Intelligence; General and Narrow AI.

Further Reading:

Brooks, Rodney. 1986. “A Robust Layered Control System for a Mobile Robot.” IEEE Journal of Robotics and Automation 2, no. 1 (March): 14–23.

Brooks, Rodney. 1990. “Elephants Don’t Play Chess.” Robotics and Autonomous Systems 6, no. 1–2 (June): 3–15.

Brooks, Rodney. 1991. “Intelligence Without Representation.” Artificial Intelligence Journal 47: 139–60.

Dennett, Daniel C. 1997. “Cog as a Thought Experiment.” Robotics and Autonomous Systems 20: 251–56.

Gallagher, Shaun. 2005. How the Body Shapes the Mind. Oxford: Oxford University Press.

Pfeifer, Rolf, and Josh Bongard. 2007. How the Body Shapes the Way We Think: A New View of Intelligence. Cambridge, MA: MIT Press.