Anne Foerst (1966–) is a Lutheran minister, theologian, author, and professor of computer science at St. Bonaventure University in Allegany, New York.

In 1996, Foerst earned a doctorate in theology from the Ruhr-University of Bochum in Germany.

She has worked as a research associate at Harvard Divinity School and, at the Massachusetts Institute of Technology (MIT), as a project director and as a research scientist in the Artificial Intelligence Laboratory.

She supervised the God and Computers Project at MIT, which encouraged people to discuss the existential questions raised by scientific research.

Foerst has written several scholarly and popular pieces on the need for better dialogue between religion and science, as well as on shifting concepts of personhood in light of robotics research.

God in the Machine, published in 2004, details her work as a theological counselor to the MIT Cog and Kismet robotics teams.

Foerst's research has been shaped by her work as a hospital counselor, her years collecting ethnographic data at MIT, and the writings of the German-American Lutheran philosopher and theologian Paul Tillich.

As a hospital counselor, she began to rethink what it means to be a "normal" human being.

After observing the variations in physical and mental capabilities among patients, Foerst was inspired to investigate the circumstances under which individuals are regarded as persons.

In her work, Foerst distinguishes between the terms "human" and "person": "human" refers to members of our biological species, while "person" refers to someone who has been granted a revocable form of social inclusion.

Foerst uses the Holocaust as an illustration of how personhood must be conferred but may also be revoked.

As a result, personhood is always vulnerable.

Treating personhood as something people bestow on one another allows Foerst to explore whether robots might be included as persons.

In her work on robots as potential persons, Foerst extends Tillich's ideas on sin, alienation, and relationality to the connections between humans and robots, as well as between robots and other robots.

- According to Tillich, people become alienated when they ignore the opposing polarities in their lives, such as the need for safety and the need for novelty or freedom.

- People reject reality, which is fundamentally ambiguous, when they refuse to recognize and engage with these opposing forces, cutting off or neglecting one side in order to concentrate entirely on the other.

- People are alienated from their lives, from the people around them, and (for Tillich) from God if they do not accept the complicated conflicts of existence.

AI research therefore carries a matching pair of opposites, danger and opportunity: the threat of reducing all things to objects or data that can be measured and studied, and the possibility of enhancing people's capacity to form connections and confer identity.

Foerst has attempted to establish a dialogue between theology and other structured fields of inquiry, following Tillich's paradigm.

Although her work has been well received in labs and classrooms, it has also met with skepticism and pushback from some who worry that she is bringing counterfactual notions into the realm of science.

Foerst treats these concerns as crucial data. She argues for a mutualistic approach in which AI researchers and theologians acknowledge their strongly held preconceptions about the universe and the human condition so that they can have fruitful discussions.

According to Foerst, many valuable insights emerge from these dialogues, provided that both parties have the humility to admit that neither has a perfect grasp of the universe or of human existence.

Foerst's work on AI is marked by humility: she observes that researchers who seek to duplicate human cognition, function, and form in the figure of the robot are startled by the vast complexity of the human person.

The way people are socially rooted, socially conditioned, and socially accountable adds to the complexity of any particular person.

Because human beings' embedded complexity is intrinsically physical, Foerst emphasizes the significance of an embodied approach to AI.

Foerst explored this embodied approach while at MIT, where having a physical body capable of interaction is essential to robotics research and development.

When addressing the evolution of artificial intelligence (AI), Foerst draws a clear distinction between robots and computers.

Robots have bodies, and those bodies are an important aspect of their learning and interaction abilities.

Although supercomputers can accomplish amazing analytic jobs and participate in certain forms of communication, they lack the ability to learn through experience and interact with others.

Foerst is dismissive of research that assumes intelligent computers can be created by re-creating the human brain.

Rather, she contends that bodies are an important part of intelligence.

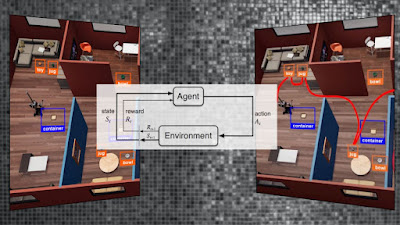

Foerst advocates raising robots in a way similar to raising human children, giving them opportunities to interact with and learn from their environment.

This process is costly and time-consuming, just as it is for human children, and Foerst reports that funding for creative, time-intensive AI research has vanished, replaced by results-driven and military-focused research that justifies itself through immediate applications, especially since the terrorist attacks of September 11, 2001.

Foerst's work incorporates a broad variety of sources, including religious texts, popular films and television programs, science fiction, and examples from the disciplines of philosophy and computer science.

According to Foerst, loneliness is a fundamental motivator of humans' desire for artificial life.

Both fictional imaginings of building a mechanical companion species and actual robotics and AI research are driven by feelings of alienation, which Foerst ties to the theological notion of a lost connection with God.

Some of Foerst's academic critics believe that she has replicated a paradigm first proposed by the German theologian and scholar Rudolf Otto in his book The Idea of the Holy (1917).

According to Otto, the experience of the holy can be found in a moment of simultaneous attraction and dread, which he calls the numinous.

Critics contend that Foerst drew on this concept when she claimed that humans sense both attraction and dread in the figure of the robot.


See also:

Embodiment, AI and; Nonhuman Rights and Personhood; Pathetic Fallacy; Robot Ethics; Spiritual Robots.

Further Reading:

Foerst, Anne. 2005. God in the Machine: What Robots Teach Us About Humanity and God. New York: Plume.

Geraci, Robert M. 2007. “Robots and the Sacred in Science Fiction: Theological Implications of Artificial Intelligence.” Zygon 42, no. 4 (December): 961–80.

Gerhart, Mary, and Allan Melvin Russell. 1998. “Cog Is to Us as We Are to God: A Response to Anne Foerst.” Zygon 33, no. 2: 263–69.

Groks Science Radio Show and Podcast with guest Anne Foerst. Audio available online at http://ia800303.us.archive.org/3/items/groks146/Groks122204_vbr.mp3. Transcript available at https://grokscience.wordpress.com/transcripts/anne-foerst/.

Reich, Helmut K. 1998. “Cog and God: A Response to Anne Foerst.” Zygon 33, no. 2: 255–62.