- Artificial Intelligence Problem Solving

- AI problem-solving steps:

- General Problem Solver Overview

- Frequently Asked Questions:

- References and Further Reading:

General Problem Solver (GPS) is a computer program that implements a problem-solving method based on means-ends analysis and planning.

The software was designed so that the problem-solving process is kept separate from knowledge specific to the problem at hand, allowing it to be applied to a wide range of problems.

The software, first created by Allen Newell and Herbert Simon in 1957, took more than a decade to complete.

George W. Ernst, a graduate student of Newell's, wrote the final version while doing research for his dissertation in 1966.

The General Problem Solver arose from Newell and Simon's work on the Logic Theorist, another problem-solving tool.

After creating Logic Theorist, the pair compared its problem-solving approach with that of people solving comparable problems.

They found that Logic Theorist's method differed significantly from that of humans.

Newell and Simon developed General Problem Solver using the knowledge of human problem solving gained from these experiments, hoping that their artificial intelligence work would contribute to a better understanding of human cognitive processes.

They found that human problem solvers could look at the intended outcome and, reasoning both backward and forward, decide on actions that would bring them closer to that outcome, eventually arriving at a solution.

General Problem Solver, which Newell and Simon regarded not just as an example of artificial intelligence but also as a theory of human cognition, incorporated this mechanism.

To solve problems, General Problem Solver uses two heuristic techniques:

- means-ends analysis and

- planning.

As an example of means-ends analysis in action, consider the following:

- A person wants a certain book; their desired state is to have it in their possession.

- The book is currently held by the library, so in their current state they do not have it.

- The person can eliminate the difference between their current and desired states.

- They may do so by borrowing the book from the library, and they have several options for getting there, including driving.

- If the book has been checked out by another patron, however, there are other ways to obtain it.

- To buy it, the person may go to a bookshop or order it online.

- The person must then consider the options now open to them.

- And so on.

The person is aware of a number of relevant actions they may take, and if they select the right ones and carry them out in the right sequence, they will obtain the book.

A person who chooses and carries out suitable actions in this way is performing means-ends analysis.

When using means-ends analysis with General Problem Solver, the programmer sets up the problem as a starting state and a state to be attained.

General Problem Solver computes the difference between these two states (called objects).

- Operators that reduce the difference between the two states must also be coded into General Problem Solver.

- It selects and applies an operator, then checks whether the operation has brought it closer to its goal or desired state.

- If so, it goes on to the next operator.

- If not, it can back up and try another operator.

- Operators are applied until the difference between the starting state and the goal state is reduced to zero.

General Problem Solver was also able to plan.

By abstracting away the details of the operators and of the difference between the starting and desired states, General Problem Solver could outline a solution to the problem.

Once a general solution had been outlined, the details could be reinserted into the problem, and the subproblems created by these details could be solved within the solution guidelines produced during the outlining step.
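The means-ends loop described above (pick an operator that reduces the difference, apply it, and back up if it fails) can be illustrated with a minimal sketch. This is not the original IPL-V program: it is a simplified Python rendering in which states are sets of facts, and the `Operator` class, the function names, and the library-book operators are all hypothetical, chosen to mirror the book example earlier in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """A hand-coded operator: what it needs, what it adds, what it removes."""
    name: str
    preconds: tuple
    adds: tuple
    deletes: tuple = ()


def achieve_all(state, goals, operators, stack):
    """Achieve each goal in turn; return (new_state, plan) or None on failure."""
    plan = []
    for goal in goals:
        result = achieve(state, goal, operators, stack)
        if result is None:
            return None
        state, steps = result
        plan.extend(steps)
    # A later step may have undone an earlier goal, so re-check them all.
    if all(goal in state for goal in goals):
        return state, plan
    return None


def achieve(state, goal, operators, stack):
    """Reduce one difference (a missing fact) by trying relevant operators."""
    if goal in state:
        return state, []          # no difference left for this goal
    if goal in stack:
        return None               # already working on this goal: avoid loops
    for op in operators:
        if goal not in op.adds:
            continue              # operator does not reduce this difference
        # Subgoal: make the operator applicable by achieving its preconditions.
        result = achieve_all(state, op.preconds, operators, stack + (goal,))
        if result is None:
            continue              # back up and try another operator
        new_state, steps = result
        new_state = new_state.difference(op.deletes).union(op.adds)
        return new_state, steps + [op.name]
    return None


def solve(start, goals, operators):
    """Return a list of operator names, or None if no plan is found."""
    result = achieve_all(frozenset(start), tuple(goals), operators, ())
    return None if result is None else result[1]


# Hypothetical operators for the library-book example.
OPERATORS = [
    Operator("drive to library", ("at home",), ("at library",), ("at home",)),
    Operator("drive to bookshop", ("at home",), ("at bookshop",), ("at home",)),
    Operator("borrow book", ("at library", "book on shelf"), ("have book",)),
    Operator("buy book", ("at bookshop",), ("have book",)),
]

print(solve({"at home", "book on shelf"}, ["have book"], OPERATORS))
# ['drive to library', 'borrow book']
print(solve({"at home"}, ["have book"], OPERATORS))
# ['drive to bookshop', 'buy book']
```

In the second call the book is checked out, so the attempt to borrow it fails and the solver backs up to the bookshop route. Note that every operator had to be written by hand, which is the burden on the programmer discussed next.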

Defining a problem and its operators in order to program General Problem Solver was a time-consuming task for programmers.

It also meant that, as a theory of human cognition or an example of artificial intelligence, General Problem Solver took for granted the very activities that, in part, constitute intelligence: defining a problem and selecting relevant actions (or operators) from an infinite number of possible actions in order to solve it.

In the mid-1960s, Ernst continued to work on General Problem Solver.

He was not interested in human problem-solving processes; instead, he wanted to find a way to broaden the generality of General Problem Solver so that it could solve problems outside the domain of logic.

In his version of General Problem Solver, the desired state or object was expressed as a set of constraints rather than an explicit specification.

Ernst also changed the form of the operators so that the output of an operator could be written as a function of the starting state or object (the input).

His revised General Problem Solver was only moderately successful in solving problems.

Even on simple problems, it often ran out of memory.

"We do believe that this specific aggregation of IPL-V code should be set to rest, as having done its bit in furthering our knowledge of the mechanics of intelligence," Ernst and Newell proclaimed in the foreword of their 1969 book GPS: A Case Study in Generality and Problem Solving (Ernst and Newell 1969, vii).

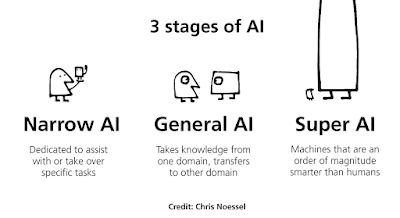

Artificial Intelligence Problem Solving

A reflex agent in AI maps states directly to actions.

When such an agent cannot operate because the state-to-action mapping is too large for it to store or handle, the problem is handed over to a problem-solving agent, which breaks the large problem into smaller subproblems and solves them one by one.

The combined result of these steps achieves the targeted goal.

Different types of problem-solving agents are defined, depending on the problem and its domain; they work at an atomic level, in which a state has no internal structure visible to the problem-solving algorithm.

A problem-solving agent works by precisely defining the problem and its possible solutions.

So we can say that problem solving is a part of artificial intelligence that encompasses a variety of techniques, such as trees, B-trees, and heuristic algorithms.

A problem-solving agent is also known as a goal-oriented agent because it is always focused on reaching its target goals.

AI problem-solving steps:

Problem solving in AI is closely tied to human nature and behavior.

To solve a problem, we need a finite set of discrete steps, which simplifies the work for humans. These are the steps that must be taken to solve a problem:

- Goal formulation is the first and simplest step in solving a problem.

It organizes the discrete steps needed to establish a target or goal that requires some action to achieve.

Today, AI agents are used to formulate the goal.

- Problem formulation is one of the most important steps in problem solving; it determines what actions should be taken to reach the formulated goal.

This core part of AI relies on a software agent, which uses the following components to formulate the problem.

Components needed to formulate the problem:

Initial state: The starting state of the problem, from which the AI agent is directed toward a specified goal.

In this state, new methods also initialize the problem domain using a specific class.

Action: In this step of problem formulation, a function with a specified class, starting from the initial state, yields all actions that are possible.

Transition: In this step of problem formulation, the action chosen in the previous step is applied and the resulting state is passed on to the next step.

Goal test: This step determines whether the transition model has reached the specified goal; if it has, the agent stops acting and moves on to the next step, which determines the cost of achieving the goal.

Path cost: This component of problem solving assigns a numerical value to the cost of achieving the goal.

It takes into account all hardware, software, and human labor costs.
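The five components listed above (initial state, actions, transition, goal test, path cost) can be collected into a single structure and handed to a generic search routine. The following is only a sketch under stated assumptions: the `Problem` class, its field names, and the toy road map are hypothetical, and uniform-cost search is used simply as one standard way to consume such a formulation.

```python
import heapq
from dataclasses import dataclass
from typing import Callable, Hashable, Iterable


@dataclass
class Problem:
    initial_state: Hashable                            # where the agent starts
    actions: Callable[[Hashable], Iterable[str]]       # actions possible in a state
    result: Callable[[Hashable, str], Hashable]        # transition model
    is_goal: Callable[[Hashable], bool]                # goal test
    step_cost: Callable[[Hashable, str], float]        # cost of one action


def uniform_cost_search(problem):
    """Return (list_of_actions, total_cost) for a cheapest path, or None."""
    counter = 0  # tie-breaker so the heap never has to compare states or paths
    frontier = [(0, counter, problem.initial_state, [])]
    best = {problem.initial_state: 0}
    while frontier:
        cost, _, state, path = heapq.heappop(frontier)
        if problem.is_goal(state):
            return path, cost
        if cost > best.get(state, float("inf")):
            continue  # stale entry: a cheaper route to this state was found
        for action in problem.actions(state):
            nxt = problem.result(state, action)
            new_cost = cost + problem.step_cost(state, action)
            if new_cost < best.get(nxt, float("inf")):
                best[nxt] = new_cost
                counter += 1
                heapq.heappush(frontier, (new_cost, counter, nxt, path + [action]))
    return None


# Hypothetical road map: each action is named after the place it leads to.
ROADS = {
    "home": {"library": 4, "bookshop": 2},
    "bookshop": {"library": 1},
    "library": {},
}

problem = Problem(
    initial_state="home",
    actions=lambda s: ROADS[s].keys(),
    result=lambda s, a: a,
    is_goal=lambda s: s == "library",
    step_cost=lambda s, a: ROADS[s][a],
)

print(uniform_cost_search(problem))  # (['bookshop', 'library'], 3)
```

Keeping the problem description separate from the search routine means the same `uniform_cost_search` function can be reused for any problem that supplies these five components.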

General Problem Solver Overview

In principle, GPS can solve any problem that can be expressed as a set of well-formed formulas (WFFs) or Horn clauses that form a directed graph with one or more sources (that is, axioms) and sinks (that is, desired conclusions).

Proofs in predicate logic and Euclidean geometry problem spaces are two prime examples of domains in which GPS can be applied.

It was based on the theoretical work on logic machines by Simon and Newell.

GPS was the first computer program to separate its knowledge of problems (expressed as input data) from its problem-solving strategy (a generic solver engine).

IPL, a third-order programming language, was used to build GPS.

While GPS was able to solve simple problems that could be sufficiently formalized, such as the Towers of Hanoi, it could not solve any real-world problems because the search was quickly lost in the combinatorial explosion.

Put another way, the number of "walks" through the inferential digraph became computationally prohibitive.

(In fact, even a simple state space search such as the Towers of Hanoi can become computationally infeasible, although judicious pruning of the state space can be achieved with basic AI techniques such as A* and IDA*.)
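To make the parenthetical remark concrete, here is a small sketch of A* search over the Towers of Hanoi state space. It is not how GPS worked; it only illustrates heuristic-guided search on the same puzzle. The state encoding (a tuple giving the peg of each disk, smallest disk first), the function names, and the heuristic (the number of disks not yet on the target peg, which never overestimates the remaining moves) are assumptions made for this example. With n disks there are 3^n states, which is where the combinatorial growth comes from.

```python
import heapq


def top_disk(state, peg):
    """Smallest disk on a peg (disks are numbered 0 = smallest), or None."""
    for disk, p in enumerate(state):
        if p == peg:
            return disk
    return None


def successors(state):
    """Yield every state reachable by one legal move."""
    for src in range(3):
        disk = top_disk(state, src)
        if disk is None:
            continue
        for dst in range(3):
            if dst == src:
                continue
            top = top_disk(state, dst)
            if top is None or top > disk:  # only onto an empty peg or a larger disk
                yield state[:disk] + (dst,) + state[disk + 1:]


def heuristic(state, target):
    """Admissible estimate: each misplaced disk needs at least one move."""
    return sum(1 for peg in state if peg != target)


def astar_hanoi(n, target=2):
    """Return (moves_in_shortest_solution, states_expanded)."""
    start, goal = (0,) * n, (target,) * n
    frontier = [(heuristic(start, target), 0, start)]
    best_g = {start: 0}
    expanded = 0
    while frontier:
        _, g, state = heapq.heappop(frontier)
        if state == goal:
            return g, expanded
        if g > best_g[state]:
            continue  # stale heap entry
        expanded += 1
        for nxt in successors(state):
            if g + 1 < best_g.get(nxt, float("inf")):
                best_g[nxt] = g + 1
                heapq.heappush(frontier, (g + 1 + heuristic(nxt, target), g + 1, nxt))
    return None


moves, expanded = astar_hanoi(3)
print(moves)  # 7
```

The shortest solution for n disks takes 2^n - 1 moves, so even with a perfect search order the solution length itself grows exponentially with the number of disks.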

To solve problems, the user defined objects and the operations that could be performed on them, and GPS generated heuristics by means-ends analysis.

- It focused on the available operations, determining which inputs and outputs were acceptable.

- It then created subgoals in order to move closer to the ultimate goal.

The GPS paradigm eventually evolved into the Soar architecture for artificial intelligence.

See also:

Expert Systems; Simon, Herbert A.

Frequently Asked Questions:

In Artificial Intelligence, what is the General Problem Solver?

Herbert Simon, J.C. Shaw, and Allen Newell proposed the General Problem Solver (GPS) as an AI program. It was the first useful computer program in the field of artificial intelligence. It was intended to work as a universal problem-solving machine.

How does the General Problem Solver work?

To solve problems, the user defined objects and the operations that could be performed on them, and GPS generated heuristics by means-ends analysis. It focused on the available operations, determining which inputs and outputs were acceptable.

What exactly did the General Problem Solver accomplish?

The General Problem Solver (GPS), which debuted in 1957, was Newell and Simon's next effort after Logic Theorist. GPS would apply heuristic techniques (modifiable "rules of thumb") repeatedly to a problem and then perform a "means-ends" analysis at each step to check whether it was getting closer to the desired solution.

What are the three key domain-general components of problem solving that Newell and Simon described in 1972?

According to Newell and Simon (1972), every problem has a problem space that is defined by three components: 1) the initial state of the problem; 2) a set of operators for transforming a problem state; 3) a test to determine whether a problem state is a solution.

What is heuristic search and how does it work?

Heuristic search is a class of methods for finding the best solution to a problem by searching a solution space. The heuristic uses some mechanism to estimate where in the solution space the solution is most likely to be found and concentrates the search on that region.

What are the elements of a general problem statement?

The problem itself, stated clearly and with enough context to explain why it matters; the method of solving the problem, often presented as a claim or a working thesis; and the purpose, statement of objective, and scope of the document the writer is preparing.

What are the steps of a basic development process that uses a problem-solving approach?

Problem-Solving Process in 8 Steps:

Step 1: Define the problem. What exactly is the problem?

Step 2: Clarify the problem.

Step 3: Define the goals.

Step 4: Identify the root cause of the problem.

Step 5: Develop an action plan.

Step 6: Execute the action plan.

Step 7: Evaluate the results.

Step 8: Continuously improve.

What's the difference between heuristic and algorithmic problem-solving?

An algorithm is a step-by-step procedure for solving a given problem in a finite number of steps. Given the same parameters (input), an algorithm's outcome (output) is predictable and repeatable. A heuristic is an educated guess that serves as a starting point for further investigation.
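The contrast can be shown on a toy problem that is not taken from GPS itself: making change with the fewest coins. In the sketch below, the function names and the coin set are hypothetical. The first function is an exhaustive dynamic-programming algorithm that always finds the minimum; the second is a "largest coin first" rule of thumb that is fast but can miss the best answer.

```python
def fewest_coins_exact(coins, amount):
    """Algorithm: dynamic programming over every total from 1 to amount."""
    inf = float("inf")
    best = [0] + [inf] * amount
    for total in range(1, amount + 1):
        for coin in coins:
            if coin <= total and best[total - coin] + 1 < best[total]:
                best[total] = best[total - coin] + 1
    return None if best[amount] == inf else best[amount]


def fewest_coins_greedy(coins, amount):
    """Heuristic: always take as many of the largest remaining coin as fit."""
    count = 0
    for coin in sorted(coins, reverse=True):
        used, amount = divmod(amount, coin)
        count += used
    return count if amount == 0 else None


coins = [1, 3, 4]
print(fewest_coins_exact(coins, 6))   # 2  (3 + 3)
print(fewest_coins_greedy(coins, 6))  # 3  (4 + 1 + 1)
```

Both functions are deterministic, but only the first is guaranteed to return the optimum; the second trades that guarantee for speed.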

What makes algorithms superior to heuristics?

Heuristics entail using a learning and discovery strategy to obtain a solution, while an algorithm is a clearly defined set of instructions for solving a problem. Use an algorithm if you know how to solve a problem.

References and Further Reading:

Barr, Avron, and Edward Feigenbaum, eds. 1981. The Handbook of Artificial Intelligence, vol. 1, 113–18. Stanford, CA: HeurisTech Press.

Ernst, George W., and Allen Newell. 1969. GPS: A Case Study in Generality and Problem Solving. New York: Academic Press.

Newell, Allen, J. C. Shaw, and Herbert A. Simon. 1960. “Report on a General Problem Solving Program.” In Proceedings of the International Conference on Information Processing (June 15–20, 1959), 256–64. Paris: UNESCO.

Simon, Herbert A. 1991. Models of My Life. New York: Basic Books.

Simon, Herbert A., and Allen Newell. 1961. “Computer Simulation of Human Thinking and Problem Solving.” Datamation 7, part 1 (June): 18–20, and part 2 (July): 35–37.