- What Is SSLV?

- What Is The Origin And Evolution Of SSLV?

- Where Is The SSLV Launch Complex?

- What Is The History Of The SSLV?

- How Will The Small Satellite Launch Vehicle (SSLV) Be Manufactured?

- What Are The Unique Features Of The Small Satellite Launch Vehicle (SSLV)?

- What Are The Expected Benefits Of The SSLV Rocket?

- What Is The Operational Performance History Of The SSLV?

- What Was The Performance Outcome Of The SSLV D1 Mission?

- What Were The Overall Lessons From The SSLV-D1/EOS-02 Mission?

- When Is The SSLV D2 Planned To Lift Off?

What Is SSLV?

The Small Satellite Launch Vehicle (SSLV) is a small-lift launch vehicle developed by ISRO for launching small satellites. It can carry 500 kg (1,100 lb) to a 500 km (310 mi) low Earth orbit or 300 kg (660 lb) to a 500 km (310 mi) Sun-synchronous orbit, and it supports multiple orbital drop-offs.

SSLV is designed with low cost and quick turnaround in mind, with launch-on-demand flexibility and minimum infrastructure needs.

The first developmental flight, SSLV-D1, launched from the First Launch Pad on August 7, 2022, but failed to reach a stable orbit.

SSLV launches to Sun-synchronous orbit will be handled in the future by the SSLV Launch Complex (SLC) at Kulasekharapatnam in Tamil Nadu.

After entering the operational phase, the vehicle's manufacture and launch operations would be handled by an Indian consortium led by NewSpace India Limited (NSIL).

What Is The Origin And Evolution Of SSLV?

The SSLV was created with the goal of commercially launching small satellites at a far lower cost and with a greater launch rate than the Polar Satellite Launch Vehicle (PSLV).

SSLV has a development cost of ₹169.07 crore (US$21 million) and a production cost of ₹30 crore (US$3.8 million) to ₹35 crore (US$4.4 million).

The expected high launch rate is based on mostly autonomous launch operations and simplified logistics in general.

A PSLV launch involves about 600 officials, whereas SSLV launch operations are overseen by a crew of about six people.

The SSLV's launch preparation phase is predicted to be less than a week rather than months.

The launch vehicle may be erected vertically, similar to the current PSLV and Geosynchronous Satellite Launch Vehicle (GSLV), or horizontally, similar to the decommissioned Satellite Launch Vehicle (SLV) and Augmented Satellite Launch Vehicle (ASLV).

The vehicle's first three stages use HTPB-based solid propellant. The fourth, terminal stage is a Velocity-Trimming Module (VTM) with eight 50 N reaction control thrusters and eight 50 N axial thrusters for adjusting velocity.

SSLV's first stage (SS1) and third stage (SS3) are newly developed, while the second stage (SS2) is derived from PSLV's third stage (HPS3).

Where Is The SSLV Launch Complex?

Early developmental flights and flights to inclined orbits would launch from Sriharikota using existing launch pads; later launches would move to a new dedicated facility at Kulasekharapatnam known as the SSLV Launch Complex (SLC).

In October 2019, tenders were issued covering production, installation, assembly, inspection, testing, and a Self Propelled Launching Unit (SPU).

When completed, this proposed spaceport at Kulasekharapatnam in Tamil Nadu would handle SSLV launches to Sun-synchronous orbit.

What Is The History Of The SSLV?

Rajaram Nagappa recommended the development route of a 'Small Satellite Launch Vehicle-1' to launch strategic payloads in a National Institute of Advanced Studies paper in 2016.

S. Somanath, then-Director of Liquid Propulsion Systems Centre, acknowledged a need for identifying a cost-effective launch vehicle configuration with 500 kg payload capacity to LEO at the National Space Science Symposium in 2016, and development of such a launch vehicle was underway by November 2017.

The vehicle design was completed by the Vikram Sarabhai Space Centre (VSSC) in December 2018.

All booster segments for the SSLV first stage (SS1) static test (ST01) were received in December 2020 and assembled in the Second Vehicle Assembly Building (SVAB).

On March 18, 2021, the SS1 first-stage booster failed its first static fire test (ST01).

Oscillations were detected about 60 seconds into the test, and the nozzle of the SS1 stage disintegrated after 95 seconds.

The test was supposed to last 110 seconds.

SSLV's solid first stage SS1 must pass two consecutive nominal static fire tests in order to fly.

In August 2021, the SSLV Payload Fairing (SPLF) functional certification test was completed.

On 14 March 2022, the second static fire test of SSLV first stage SS1 was performed at SDSC-SHAR and satisfied the specified test goals.

How Will The Small Satellite Launch Vehicle (SSLV) Be Manufactured?

ISRO has begun development of a Small Satellite Launch Vehicle to serve the burgeoning global small satellite launch service industry.

NSIL would be responsible for manufacturing SSLV via Indian industry partners.

What Are The Unique Features Of The Small Satellite Launch Vehicle (SSLV)?

SSLV is designed to meet "Launch on Demand" requirements while remaining cost-effective.

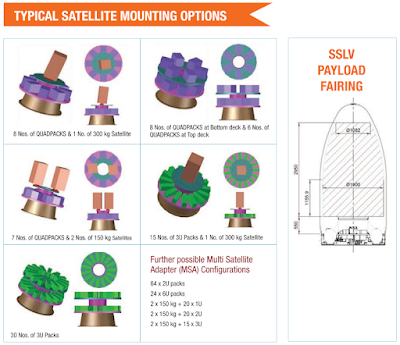

It is a three-stage, all-solid vehicle capable of launching satellites of up to 500 kg into a 500 km LEO.

What Are The Expected Benefits Of The SSLV Rocket?

- Reduced turnaround time

- Launch on demand

- Cost optimization for realization and operation

- Ability to accommodate several satellites

- Minimum infrastructure required for launch

- Design practices that have stood the test of time

The first flight from SDSC SHAR was originally scheduled for the fourth quarter of 2019, but it did not take place until August 2022.

Following the first developmental flights, ISRO plans to produce SSLV via Indian Industries through its commercial arm, NSIL.

What Is The Operational Performance History Of The SSLV?

The SSLV's maiden developmental flight took place on August 7, 2022.

The mission was designated SSLV-D1.

The SSLV-D1 flight's mission goals were not met.

The rocket featured three stages and a fourth Velocity Trimming Module (VTM).

In its D1 version, the rocket stood 34 m tall, with a diameter of 2 m and a lift-off mass of 120 t.

The rocket carried EOS-02, a 135 kg Earth observation satellite, and AzaadiSAT, an 8 kg CubeSat payload designed by Indian students to promote inclusion in STEM education.

The SSLV-D1 was planned to deploy the two satellite payloads in a circular orbit with a height of 356.2 km and an inclination of 37.2°.

ISRO attributed the mission's failure to a software problem.

According to ISRO, the mission software identified an accelerometer anomaly during second-stage separation.

As a result, the rocket's navigation switched from closed-loop to open-loop guidance.

Although this change of guidance mode was part of the redundancy built into the rocket's navigation, it was not enough to save the mission.

In open-loop guidance mode, the final VTM stage fired for only 0.1 s rather than the required 20 s.

As a result, the two satellites and the rocket's VTM stage were injected into an unstable elliptical orbit of 356 km × 76 km.
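A rough two-body estimate helps show why such an orbit cannot last. The sketch below assumes the reported orbit was about 356 km by 76 km (apogee and perigee altitudes) and uses standard values for Earth's gravitational parameter and equatorial radius. The function name `orbit_summary` and its dictionary keys are illustrative only, and atmospheric drag is ignored. It applies the vis-viva equation, v² = μ(2/r − 1/a), to compare the speed at apogee with the speed a circular orbit at that altitude would need.

```python
import math

MU_EARTH_KM3_S2 = 398_600.4418  # Earth's standard gravitational parameter
R_EARTH_KM = 6_378.137          # Earth's equatorial radius


def orbit_summary(apogee_alt_km: float, perigee_alt_km: float) -> dict:
    """Idealized two-body figures for an elliptical orbit (no drag)."""
    r_apogee = R_EARTH_KM + apogee_alt_km
    r_perigee = R_EARTH_KM + perigee_alt_km
    semi_major_axis = (r_apogee + r_perigee) / 2
    eccentricity = (r_apogee - r_perigee) / (r_apogee + r_perigee)

    # Vis-viva: speed at apogee of the elliptical orbit.
    v_apogee = math.sqrt(MU_EARTH_KM3_S2 * (2 / r_apogee - 1 / semi_major_axis))
    # Speed needed for a circular orbit at the apogee altitude.
    v_circular = math.sqrt(MU_EARTH_KM3_S2 / r_apogee)

    return {
        "semi_major_axis_km": semi_major_axis,
        "eccentricity": eccentricity,
        "v_apogee_km_s": v_apogee,
        "v_circular_km_s": v_circular,
        # Extra speed an ideal burn at apogee would need to circularize.
        "circularization_dv_m_s": (v_circular - v_apogee) * 1000,
    }


# Assumed altitudes: 356 km apogee, 76 km perigee.
summary = orbit_summary(356.0, 76.0)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

With a perigee altitude of only 76 km, the low point of the orbit lies deep in the atmosphere, so the satellites could not stay aloft. The speed difference printed here is only an idealized estimate of what a burn at apogee would have had to supply, not a figure reported by ISRO.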

SSLV-D1's final VTM stage had 16 hydrazine-based (MMH + MON-3) thrusters.

Eight of them were to regulate orbital velocity and the other eight were to control attitude.

During the orbital insertion maneuvers, the VTM stage also controlled pitch, yaw, and roll.

All three of SSLV-D1's main stages performed normally.

However, this was insufficient to provide enough thrust for the two satellite payloads to establish stable orbits.

The VTM stage needed to burn for at least 20 seconds to impart enough extra orbital velocity and altitude adjustment to put the two satellite payloads into their designated stable orbits.

Instead, the VTM activated at 653.5s and shut down at 653.6s after lift-off.

After this brief VTM firing, EOS-02 was released at 738.5 s and AzaadiSAT at 788.4 s after lift-off.

Because of these failures, the satellites reached only an unstable orbit and were destroyed upon reentry.
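The event times quoted above can be checked with a few lines of arithmetic. The sketch below only restates the figures from the text (VTM ignition and cutoff, the two separation times, and the 20 s required burn); the variable names are illustrative, and rounding is used to avoid floating-point noise.

```python
# Times in seconds after lift-off, as quoted in the text.
VTM_IGNITION_S = 653.5
VTM_CUTOFF_S = 653.6
EOS02_SEPARATION_S = 738.5
AZAADISAT_SEPARATION_S = 788.4
REQUIRED_BURN_S = 20.0  # minimum VTM burn stated in the text

# Actual burn duration and how much of the required burn it covered.
actual_burn_s = round(VTM_CUTOFF_S - VTM_IGNITION_S, 3)
burn_fraction = actual_burn_s / REQUIRED_BURN_S

# Intervals between the remaining events.
cutoff_to_eos02_s = round(EOS02_SEPARATION_S - VTM_CUTOFF_S, 3)
eos02_to_azaadisat_s = round(AZAADISAT_SEPARATION_S - EOS02_SEPARATION_S, 3)

print(f"VTM burn: {actual_burn_s} s ({burn_fraction:.1%} of required)")
print(f"VTM cutoff to EOS-02 release: {cutoff_to_eos02_s} s")
print(f"EOS-02 to AzaadiSAT release: {eos02_to_azaadisat_s} s")
```

The burn covered well under one percent of the required duration, which is consistent with the satellites being left far short of a stable orbit.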

What Was The Performance Outcome Of The SSLV D1 Mission?

SSLV-D1 was the SSLV's maiden developmental flight.

The mission goal was a circular orbit with a height of 356.2 km and an inclination of 37.2°.

Two satellite payloads were carried on the flight:

- The 135 kg EOS-02 Earth observation satellite

- The 8 kg AzaadiSAT CubeSat

Due to a sensor fault and flaws in the onboard software, the VTM stage and the two satellite payloads were placed in an unstable elliptical orbit of 356 km × 76 km and were destroyed upon reentry.

According to ISRO, the mission software failed to detect and rectify a sensor malfunction in the VTM stage.

The final VTM stage fired only momentarily (0.1 s).

What Were The Overall Lessons From The SSLV-D1/EOS-02 Mission?

ISRO developed the Small Satellite Launch Vehicle (SSLV) to launch satellites of up to 500 kg into low Earth orbits on a "launch-on-demand" basis.

The SSLV-D1/EOS-02 mission, the vehicle's first developmental flight, was slated for 09:18 a.m. (IST) on August 7, 2022, from the First Launch Pad at the Satish Dhawan Space Centre in Sriharikota.

The SSLV-D1 mission was to place EOS-02, a 135 kg satellite, into a low Earth orbit about 350 km above the equator at an inclination of roughly 37 degrees.

The mission also carried the AzaadiSAT satellite.

SSLV is built with three solid stages weighing 87 t, 7.7 t, and 4.5 t.

The satellite is inserted into the desired orbit using a liquid propulsion-based velocity trimming module.

- SSLV is capable of launching Mini, Micro, or Nanosatellites (weighing between 10 and 500 kg) into a 500 km planar orbit.

- SSLV gives low-cost on-demand access to space.

- It offers a quick turnaround time, the ability to accommodate multiple satellites, launch-on-demand capability, and minimal launch infrastructure needs.

SSLV-D1 is a 34-meter-tall, 2-meter-diameter vehicle with a lift-off mass of 120 tonnes.
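As a quick sanity check, the three solid-stage figures can be compared with the quoted lift-off mass. The sketch below simply adds the numbers given in the text; the variable names are illustrative, and whether the per-stage figures are full stage masses or propellant loads is not stated here, so the remainder is only a rough bound on everything else (VTM, fairing, payloads, and possibly inert structure).

```python
# Figures quoted in the text, in tonnes.
SOLID_STAGE_MASSES_T = {"stage 1": 87.0, "stage 2": 7.7, "stage 3": 4.5}
LIFTOFF_MASS_T = 120.0

# Combined mass of the three solid stages and their share of lift-off mass.
solid_total_t = round(sum(SOLID_STAGE_MASSES_T.values()), 1)
solid_share = solid_total_t / LIFTOFF_MASS_T

# Whatever is not accounted for by the three quoted figures.
remainder_t = round(LIFTOFF_MASS_T - solid_total_t, 1)

print(f"Three solid stages: {solid_total_t} t ({solid_share:.1%} of lift-off mass)")
print(f"Remainder: {remainder_t} t")
```

The first stage alone accounts for most of the vehicle's mass, which is typical of multi-stage launchers where each upper stage is much lighter than the one below it.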

ISRO developed and built the EOS-02 earth observation satellite.

This microsatellite-class spacecraft was designed to provide optical remote sensing in the infrared band with high spatial resolution.

The bus configuration is based on the IMS-1 bus.

AzaadiSAT is an 8U Cubesat that weighs around 8 kg.

It carries 75 distinct payloads, each weighing roughly 50 grams and performing femto-experiments.

These payloads were built with the help of female students from rural areas around the nation.

The payloads were assembled by the "Space Kidz India" student team.

The payloads include a UHF-VHF transponder operating on ham radio frequencies so that amateur radio operators can transmit voice and data, a solid-state PIN diode-based radiation counter to measure the ionizing radiation in its orbit, a long-range transponder, and a selfie camera.

The data from this satellite was planned to be received using the ground system built by 'Space Kidz India.'

Both satellites were lost as a result of the failure of SSLV-D1's terminal stage.

When Is The SSLV D2 Planned To Lift Off?

The SSLV's second developmental flight is planned for November 2022.

It is intended to carry four BlackSky Global satellites weighing 56 kg to a 500 km circular orbit with a 50° inclination.

It is also expected to place the X-ray polarimeter satellite into low Earth orbit (LEO).
