- Issues Covered At The Conference.

- Mechanization of Thought Processes

- Dartmouth Summer Research Project

- Context Of The Dartmouth AI Conference:

- List Of Dartmouth AI Conference Attendees:

- See also:

- References & Further Reading:

The Dartmouth Conference on Artificial Intelligence, officially known as the "Dartmouth Summer Research Project on Artificial Intelligence," was held in 1956 and is frequently referred to as the Constitutional Convention of AI.

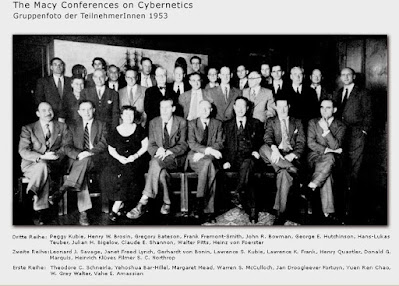

- The multidisciplinary conference, held on the Dartmouth College campus in Hanover, New Hampshire, brought together specialists in cybernetics, automata and information theory, operations research, and game theory.

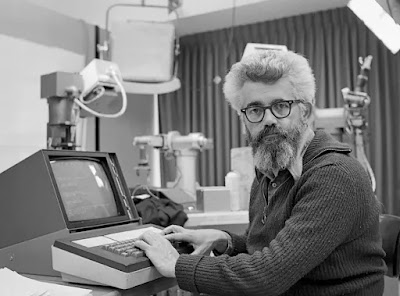

- Claude Shannon (the "father of information theory"), Marvin Minsky, John McCarthy, Herbert Simon, Allen Newell ("founding fathers of artificial intelligence"), and Nathaniel Rochester (architect of IBM's first commercial scientific mainframe computer) were among the more than twenty attendees.

- Participants came from the MIT Lincoln Laboratory, Bell Laboratories, and the RAND Systems Research Laboratory.

The Rockefeller Foundation provided a substantial portion of the funding for the Dartmouth Conference.

The Dartmouth Conference, which lasted around two months, was envisaged by the organizers as a way to make rapid progress on computer models of human cognition.

- The organizers took as the starting point for their deliberations the conjecture that "every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it" (McCarthy 1955, 2).

- In his Rockefeller Foundation proposal a year before the summer meeting, mathematician and principal organizer John McCarthy coined the term "artificial intelligence." McCarthy later said that the new name was intended to distinguish his research from the discipline of cybernetics.

- He was a driving force behind symbol-processing approaches to artificial intelligence, which were in the minority at the time.

- In the 1950s, analog cybernetic techniques and neural networks were the most common brain modeling methodologies.

Issues Covered At The Conference.

The Dartmouth Conference covered a broad range of topics, from complexity theory and neuron nets to creative thinking and randomness.

- The conference is notable for being the site of the first public demonstration of Newell, Simon, and Clifford Shaw's Logic Theorist, a program that could independently prove theorems stated in Bertrand Russell and Alfred North Whitehead's Principia Mathematica.

- Logic Theorist was the only program presented at the conference that tried to simulate the logical properties of human intelligence.

- Attendees predicted that by 1970, digital computers would have become chess grandmasters, discovered new and important mathematical theorems, produced passable language translations and understood spoken language, and composed classical music.

- Because the Rockefeller Foundation never received a formal report on the conference, most of what is known about the proceedings comes from recollections, handwritten notes, and a few papers written by participants and published elsewhere.

Mechanization of Thought Processes

Following the Dartmouth Conference, the British National Physical Laboratory (NPL) hosted an international conference on "Mechanization of Thought Processes" in 1958.

- Several Dartmouth Conference attendees, including Minsky and McCarthy, spoke at the NPL conference.

- At the NPL conference, Minsky noted the importance of the Dartmouth Conference both to the development of his heuristic program for solving plane geometry problems and to the shift from analog feedback, neural networks, and brain modeling toward symbolic AI techniques.

- Neural networks did not resurface as a research topic until the mid-1980s.

Dartmouth Summer Research Project

The Dartmouth Summer Research Project on Artificial Intelligence was a watershed moment in the development of AI.

Held in the summer of 1956, the project brought together a small group of scientists to launch this area of study.

To mark the 50th anniversary in 2006, more than 100 researchers and academics gathered at Dartmouth for AI@50, a conference that celebrated the past, assessed current achievements, and helped seed ideas for future artificial intelligence research.

John McCarthy, then a mathematics professor at the College, convened the original gathering.

According to his proposal, the meeting was to "proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it."

James Moor, Professor of Philosophy and director of AI@50, explains that the researchers who came to Hanover 50 years earlier were thinking about ways to make machines more aware and sought to lay out a framework for better understanding human intelligence.

Context Of The Dartmouth AI Conference:

Cybernetics, automata theory, and complex information processing were all terms used in the early 1950s to describe the science of "thinking machines."

The wide range of names reflects the wide range of intellectual approaches.

In 1955, John McCarthy, then an Assistant Professor of Mathematics at Dartmouth College, decided to form a group to clarify and develop ideas about thinking machines.

- For the new field, he chose the name 'Artificial Intelligence.' He picked the term mainly to avoid a narrow focus on automata theory, and to avoid cybernetics, which concentrated heavily on analog feedback and might have meant either accepting the assertive Norbert Wiener as guru or arguing with him.

- In early 1955, McCarthy approached the Rockefeller Foundation to request funding for a summer seminar at Dartmouth for about 10 participants.

- In June, he and Claude Shannon, then at Bell Labs, met with Robert Morison, Director of Biological and Medical Research, to discuss the idea and possible funding, but Morison was unsure whether money would be made available for such a bold initiative.

McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon formally submitted the proposal in September. The proposal is credited with introducing the term "artificial intelligence."

According to the proposal,

- We propose that a 2-month, 10-man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire.

- The research will be based on the hypothesis that any part of learning, or any other characteristic of intelligence, can be characterized exactly enough for a computer to imitate it.

- An attempt will be made to find out how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.

- We believe that if a properly chosen group of scientists worked on one or more of these topics together for a summer, considerable progress might be accomplished.

- The proposal goes on to discuss computers, natural language processing, neural networks, theory of computation, abstraction, and creativity, areas that are still considered relevant to the field.

McCarthy remarked, "We'll focus on the difficulty of figuring out how to program a calculator to construct notions and generalizations. Of course, this is subject to change once the group meets."

According to Stottler Henke Associates, the participants at the meeting included Ray Solomonoff, Oliver Selfridge, Trenchard More, Arthur Samuel, Herbert A. Simon, and Allen Newell.

The actual participants arrived at different times, and most stayed for far shorter periods than the full two months.

- Rochester was replaced for three weeks by Trenchard More, and MacKay and Holland were unable to attend, but the project was set to begin.

- Around June 1956, the first participants (perhaps only Ray Solomonoff, possibly with Tom Etter) arrived at Dartmouth College in Hanover, New Hampshire, to join John McCarthy, who already had an apartment there.

- Solomonoff and Minsky stayed at professors' apartments, while most of the other participants stayed at the Hanover Inn.

List Of Dartmouth AI Conference Attendees:

- Ray Solomonoff

- Marvin Minsky

- John McCarthy

- Claude Shannon

- Trenchard More

- Nat Rochester

- Oliver Selfridge

- Julian Bigelow

- W. Ross Ashby

- W.S. McCulloch

- Abraham Robinson

- Tom Etter

- John Nash

- David Sayre

- Arthur Samuel

- Kenneth R. Shoulders

- Shoulders' friend

- Alex Bernstein

- Herbert Simon

- Allen Newell

~ Jai Krishna Ponnappan

See also:

Cybernetics and AI; Macy Conferences; McCarthy, John; Minsky, Marvin; Newell, Allen; Simon, Herbert A.

References & Further Reading:

Crevier, Daniel. 1993. AI: The Tumultuous History of the Search for Artificial Intelligence. New York: Basic Books.

Gardner, Howard. 1985. The Mind’s New Science: A History of the Cognitive Revolution. New York: Basic Books.

Kline, Ronald. 2011. “Cybernetics, Automata Studies, and the Dartmouth Conference on Artificial Intelligence.” IEEE Annals of the History of Computing 33, no. 4 (April): 5–16.

McCarthy, John. 1955. “A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence.” Rockefeller Foundation application, unpublished.

Moor, James. 2006. “The Dartmouth College Artificial Intelligence Conference: The Next Fifty Years.” AI Magazine 27, no. 4 (Winter): 87–91.

Solomonoff, R. J. 1985. “The Time Scale of Artificial Intelligence: Reflections on Social Effects.” Human Systems Management 5: 149–153.

http://raysolomonoff.com/dartmouth/boxbdart/dart56ray812825who.pdf 1956

personal communication